A straightforward way to achieve this function using C code would look like this :

while (*(bytePos+len) == *(ComparePos+len)) len++;It works well, and is trivial to understand. However, we are giving away a critical property of modern CPU : they can process whole WORD in a single step. For 32 bits CPU, it means that 4 Bytes could be compared in a single instruction. This is expectedly faster than loading and comparing 4 times each single byte.

An optimised comparison function then becomes like this ;

while (*(int32*)(bytePos+len) == *(int32*)(ComparePos+len)) len+=4;

if (*(int16*)(bytePos+len) == *(int16*)(ComparePos+len)) len+=2;

if (*(bytePos+len) == *(ComparePos+len)) len++;While more complex, this version will provide better performance, especially for longer matches. It has however two drawbacks.

The first problem comes from the very need to compare int32 WORD values. Most modern CPU will have no problem with that, but some, such as ARM, will require these values to be aligned. This means that the WORD value must start at a boundary which is a multiple of 4. Well, since compression algorithms have to compare sequences which start at arbitrary position, this condition cannot be accommodated. ARM will have to live with the simpler comparison loop.

The second problem comes from the trailing comparisons. On leaving the main loop, since we know that we have less than 4 identical bytes, we still need to check if they are 0, 1, 2 or 3. This can be optimally achieved by using 2 comparisons.

Not bad, but still, it means there is a minimum of 3 comparisons to exit this function, and comparisons aren't good for CPU pipelines, especially unpredictable ones. The CPU will try to "guess" the result of these comparisons, in order to keep its pipeline busy by speculatively executing the next instructions. But if it fails, it will have to stall and flush the whole pipeline, resulting in significant performance penalty.

For this very reason, it can be preferable to use mathematical formulas to get the same result, even when they are more complex, since they avoid branching, ensuring a predictable CPU throughput.

In our situation, we want to get rid of the trailing comparisons and end up with a mathematical formula which gives us the number of continuous identical bytes, starting from the first one. We'll consider that we live in a little endian world in the next part of this post, then the first byte becomes the lowest one.

We know that at least one bit is different, since otherwise we would still be in the main loop.

To find this bit, we will use a simple XOR operation between the compared sequences :

difference = *(int32*)bytePos ^*(int32*)comparePos;

If (difference==0), then both sequences are identical.

Since they are not, one bit 1 at least exists. Finding how many bytes are identical between the 2 sequences is now equivalent to finding the rank of the smallest bit 1.

A naive strategy to find lowest bit 1 rank would be to test bits recursively, such as :

while ((difference&1)==0) { difference>>=1; rank++; }

But obviously, this is not going to be our strategy, since we end up with even more comparisons than we started with.

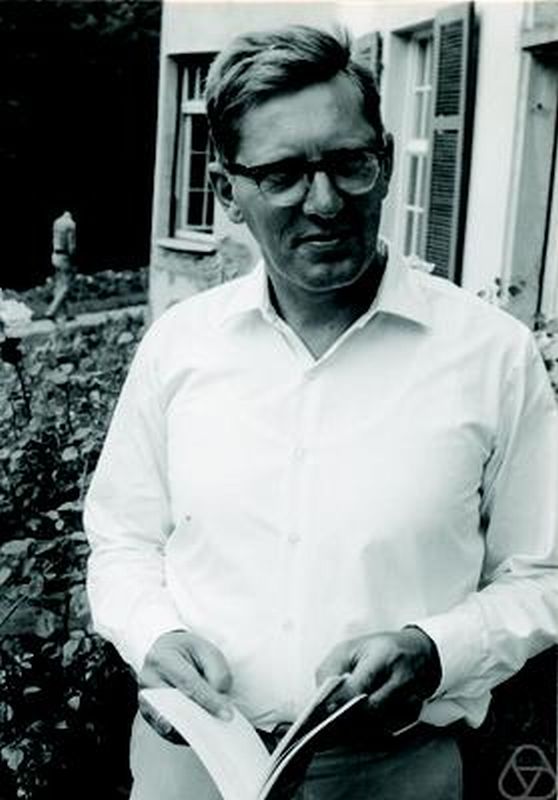

Enters Nicolaas De Bruijn.

The De Bruijn sequence will help us to transform this problem into a mathematical formula. It states that, given an alphabet A with k elements, there is at least one cyclic sequence C within which any possible sub-sequence of size n using alphabet A exists exactly once. It even provides a methodology to build one.

Such a cyclic sequence can become terribly large. We are lucky that for computers, A is just a 2 elements alphabet, bits1 & 0. But we still need to manage n.

We'll do so by keeping only the lowest bit 1 from the xor'ed difference vector. It can be achieved thanks to a simple trick :

LowestBitOne = difference & -difference;

It works because we are in a two's complement world. To summarize, given a value (i), its negative value (-i) can be obtained by inverting all bits, and then adding 1. As a consequence, all bits will be set to zero (since 1 & 0 = 0) except the last (lowest) bit 1, due to the +1.

Now, only the lowest bit 1 remains, which means we have only 32 possible values to consider, and they are all 2^N.

Thanks to the De Bruijn theorem, we now can map these 32 values into a small table of size 32.

We will create a DeBruijn bit sequence which maps all values from 0 to 31 into it (n=5). Since multiplying by 2^N is equivalent to left-shifting by N, the analogy with DeBruijn becomes obvious : we want a bit sequence which, when shifted left bit by bit, produces all possible values between 0 and 31 exactly once.

This image provides a construction method to build such a bit sequence, based on Hamiltonian path. It's a bit complex, so here is one such solution for 5 bits :

00000111011111001011010100110001

In theory, the sequence is supposed to be cyclic, but since we will only shift left, we fill the higher bits with 0 in order to "simulate" cyclic behavior.

It might not look obvious that all values from 0 to 31 are present in this chain, so you can also try it for yourself to verify it.

Knowing the serie generated by shifting the above De Bruijn sequence is now equivalent to knowing the shift which was used. So it provides the result we are looking for with a simple table lookup :

DeBruijnIndex = (0x077CB531 * LowestBitOne) >> 27;

Result = DeBruijnTable[DeBruijnIndex];

And here we are, we have changed 2 comparisons into 3 mathematical operations and one table lookup. Is that a gain ?

Well, according to LZ4 latest benchmark, it seems it is. Gains vary from negligible to measurable, but always positive, so on average it seems a step into the right direction.

This trick is now integrated into LZ4 r41 and later versions.