After a very fast evaluation, LZ4 has been recently integrated into the Apache project Hadoop - MapReduce.

This is important news since, in my humble opinion, Hadoop is among the most advanced and ambitious projects to date (an opinion which is shared by some). It also serves as an excellent illustration of LZ4 usage, as an in-memory compression algorithm for big server applications.

But first, a few words on Hadoop.

By 2005, Google shook the IT world by presenting BigTable, its home-grown distributed database with eventual consistency, able to store virtually the entire web and query it. BigTable was built on top of Google FS, a virtual file system spanning tens of thousands of computers distributed across hundreds of data centers all over the world, as if they were a single massive machine. This seemingly limitless amount of stored data could then be processed in parallel, typically for query preparation, thanks to the MapReduce framework, which makes it possible to process petabytes of data in a small amount of time (if you can afford the number of servers necessary for that).

At this very moment, Google stole the crown of programming champion from Microsoft. It was now clear that they were shaping the future. Although most of these technologies had already been studied, it was the first time they were executed together and so well, at such a huge scale, for commercially available products. This gave Google a lead of several years over the competition, since most of its Web products were based on these capabilities.

Since then, all the other "big names" of IT (namely Yahoo, Facebook, Amazon, IBM, Microsoft, Apple, etc.) have wanted to replicate this architecture. All these efforts finally converged into the open-source project Hadoop.

Hadoop now has most of the capabilities presented in 2005 by Google, including a Distributed File storage system (HDFS), a distributed Database (HBase), and the same distributed-computing framework as Google, MapReduce.

So, where does LZ4 fit in ?

Well, in such an architecture, compression is used as a performance enabler.

As can be guessed, massive amounts of data are traveling between the different nodes. Moreover, each node is also processing a fair amount of data, more or less permanently.

In such situations, compression offers some advantages : less data to transfer means less time and cost to send/receive it. It also means that more data can be stored in RAM, or that more data can remain in the CPU cache. All this translates into better system speed.

Or does it ? For this claim to hold, the compression algorithm must be "unobtrusive", which means it should consume very few CPU cycles. Otherwise, the cost of compression can cancel or even reverse the speed advantage. This basically means only fast compressors qualify for the job.
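The trade-off can be made concrete with a back-of-the-envelope model. The sketch below is only an illustration: the function names are made up for this example, and it assumes that compression, transfer and decompression run strictly one after another, with no overlap. Real systems usually pipeline these steps, which makes compression look even better than this model suggests.

```c
/* Time (seconds) to send `bytes` of raw data over a link delivering
 * `link_bps` bytes per second, without any compression. */
static double plain_time(double bytes, double link_bps)
{
    return bytes / link_bps;
}

/* Same transfer with compression, assuming the three steps are serialized.
 * ratio      : raw size divided by compressed size (2.0 means "half the size")
 * comp_bps   : compression speed, in raw bytes per second
 * decomp_bps : decompression speed, in raw bytes per second */
static double compressed_time(double bytes, double link_bps, double ratio,
                              double comp_bps, double decomp_bps)
{
    return bytes / comp_bps              /* compress   */
         + (bytes / ratio) / link_bps    /* transfer   */
         + bytes / decomp_bps;           /* decompress */
}
```

With a 2:1 ratio, a compressor running 5 times faster than the link wins, while a compressor running at link speed loses: the CPU cost is larger than the transfer time it saves.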

In the beginning, LZO was such a champion. It offered great speed, however with some important usage limitations, due to its GPL license.

Then, in early 2011, Google released Snappy (formerly Zippy), the very same algorithm used by Google in its own BigTable implementation. It quickly became a great alternative, thanks to its more permissive license and better performance.

LZ4 was also released this year, just after Snappy. Google's fame meant there was little attention left for competing algorithms. But it also raised awareness that fast compression algorithms have a role in IT architecture. LZ4 gradually improved over time, to the point of now providing better performance than Google's creation. One of Hadoop's contributors, Binglin Chang, made the effort to implement LZ4 as a JNI patch, and to compare it directly to Snappy. LZ4's performance was found to be better than Snappy's, even when using Snappy's own set of calibration tests.

In a relatively quick decision process, the LZ4 patch was then integrated into the main Hadoop - MapReduce source trunk.

/* Update : Google's Snappy developer kindly reminds that benchmark figures depend on the tested configuration, and states that on their own test platform, Snappy keeps an edge with regards to compression speed. See comment : http://fastcompression.blogspot.com/2011/12/lz4-into-hadoop-mapreduce.html?showComment=1326071743955#c7649536680897239608 */

The advantage of using fast compression algorithms, as Hadoop does, can be replicated in many server-side applications, for example databases. Recently, column-oriented databases have been drawing attention, since they make heavy use of compression to gain some impressive performance advantages. The idea remains the same : compress data to keep more of it in RAM and in CPU cache, which directly translates into better performance.

Monday, December 19, 2011

Monday, December 12, 2011

Advanced Parsing Strategies

Getting better compression ratio with LZ algorithms requires more than looking for long matches. It also requires some "parsing".

To explain it simply, one would assume that once he finds a "best possible match" at current position, he just has to encode it and move forward.

But in fact, there are better possibilities. The naive encoding strategy is called "greedy match". A proven better proposition is to check if, at next position, a better (i.e. longer) match exists. If it does, then the current match is dropped in favor of the next one. This is called "lazy match", and typically improves the compression ratio by 1-2%, which is not bad considering that the compression format remains unaffected.

Lazy match is simple enough to understand, and clearly illustrates the trade-off at stake : compute more searches, in order to find better (longer) matches and improve compression ratio.
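The difference between greedy and lazy parsing can be sketched in a few lines. This is a toy illustration, not Zhuff or LZ4 code: the match finder is a brute-force search over all earlier positions, MINMATCH is assumed to be 4, and the parser only counts literals and matches instead of encoding them.

```c
#include <stddef.h>

#define MINMATCH 4   /* assumed minimum useful match length */

/* Brute-force longest match at `pos`, looking at every earlier position.
 * Returns 0 when nothing reaches MINMATCH. */
static size_t longest_match(const unsigned char *buf, size_t size, size_t pos)
{
    size_t best = 0;
    for (size_t cand = 0; cand < pos; cand++) {
        size_t len = 0;
        while (pos + len < size && buf[cand + len] == buf[pos + len]) len++;
        if (len > best) best = len;
    }
    return (best >= MINMATCH) ? best : 0;
}

/* Walks the buffer and counts emitted literals and matches.
 * lazy == 0 : greedy parsing, take the match found at the current position.
 * lazy != 0 : if the next position offers a longer match, emit one literal
 *             instead and let the next iteration take the longer match. */
static void parse(const unsigned char *buf, size_t size, int lazy,
                  size_t *literals, size_t *matches)
{
    size_t pos = 0;
    *literals = 0;
    *matches = 0;
    while (pos < size) {
        size_t ml = longest_match(buf, size, pos);
        if (ml == 0) { (*literals)++; pos++; continue; }
        if (lazy && pos + 1 < size && longest_match(buf, size, pos + 1) > ml) {
            (*literals)++;   /* current match dropped in favor of the next one */
            pos++;
            continue;
        }
        (*matches)++;
        pos += ml;
    }
}
```

On the 21-byte input "abcdXbcdefghYabcdefgh", greedy parsing ends with two matches of length 4, while lazy parsing ends with one literal followed by a single match of length 7.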

At the other extreme, there is a strategy called "Optimal parsing", which consists in computing a series of matches at each position, and then selecting the best ones by a reverse traversal computation (like a "shortest path" algorithm). Optimal parsing requires a huge amount of computation, especially at the search step, since each and every position must be fully checked, with a complete list of match candidates in ascending order.

Not willing to pay too much on the CPU side, i've tried to find a middle way, which would mostly emulate the "optimal parsing" result but with a CPU cost closer to Lazy match.

The main idea is as follows :

Suppose i'm getting a match of length ML at position P.

The only reason i would not want to encode it immediately is if a better match exists between P and P+ML.

We take the assumption that, at position P, we have found the best possible match. Then, the smallest possible "better match" must have a length ML+1 and start at position P+1.

Consequently, the "smallest and closest better match" must end at position (P+1) + (ML+1) = P+ML+2.

Therefore, should such a better match exist, i'll find it by searching for the sequence stored at position P+ML+2-MINMATCH. Moreover, should any other better match exist, it will necessarily include the sequence stored at position P+ML+2-MINMATCH. So i will get all of them, and consequently the best of them, by simply searching for this sequence.

Compared to Optimal Parsing, which requires a full search at each position, this is a huge speed improvement.

However, the search is more complex than it sounds. To begin with, the "longest match" may start at any position between P and P+ML+2-MINMATCH. To find it, it will be necessary to check the match length in both forward and backward directions.
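As a rough sketch of what this two-way check could look like: the helper names below are invented for the example, MINMATCH is assumed to be 4, and the candidate position is assumed to come from some match finder (not shown). The backward extension stops at a caller-supplied lower bound, which would be P+1 here since a better match cannot start before that.

```c
#include <stddef.h>

#define MINMATCH 4   /* assumed minimum match length */

typedef struct { size_t start; size_t length; } match_t;

/* Position of the sequence to search for, given a match of length ml at p. */
static size_t probe_position(size_t p, size_t ml)
{
    return p + ml + 2 - MINMATCH;
}

/* `cand` < `cur` is an earlier position known to hold the same sequence as `cur`.
 * The match is grown backward (never before `lowest_start`), then forward. */
static match_t extend_match(const unsigned char *buf, size_t size,
                            size_t cur, size_t cand, size_t lowest_start)
{
    size_t back = 0, fwd = 0;
    while (cur - back > lowest_start && cand - back > 0 &&
           buf[cur - back - 1] == buf[cand - back - 1]) back++;
    while (cur + fwd < size && buf[cur + fwd] == buf[cand + fwd]) fwd++;
    match_t m = { cur - back, back + fwd };
    return m;
}
```

For example, in "abcdXbcdefghYabcdefgh", a first match of length 4 at P=13 leads to probing position 15 ("cdef"). The candidate at position 6 extends one byte backward and six bytes forward, giving a match of length 7 starting at position 14.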

It means it's not possible to use advanced match finders, such as BST or MMC, which tend to eliminate unpromising candidates. That elimination is not suitable here, since the total match length may be achieved thanks to the backward direction. Therefore, each and every stream which contains the searched sequence must be checked.

In these circumstances, search algorithm is basically limited to Hash Chain, which is only suitable for "short range" searches. So, it's a first limitation.

If no better match is found, i can safely write the current best match, and then start the search again.

If a better match is found, it means we have overlapping matches, with the second one being better than the first. It would be tempting to simply shorten the first match, in order to "make room" for the longer one, and then start the process again, but alas it's more complex than that. This decision will depend on P3.

Let's analyse the situation more thoroughly.

We have 3 overlapping matches, at positions P1, P2, & P3, with :

ML3 > ML2 > ML1

P2 <= E1 (=P1+ML1)

P3 <= E2 (=P2+ML2)

If P3<E1, then P2 is discarded, P3 becomes P2, and we search for a new P3.

If P3=E1, then P1 can be encoded, P2 is discarded, P3 becomes P1, and we continue the search.

Obviously, the situation is less trivial in the more common situation when P3 > E1.

If P3 < E1 + VerySmallLength, then it's very probable that P2 will not pay for itself. A match costs an offset and a length, which can cost more than a few literals. Dealing with this case is almost equivalent to P3=E1, so we'll consider it closed.

If P3-P2 > ML1, then we know that the 2nd match will be longer than the 1st one. So we can proceed with shortening P1 length to ML1=P2-P1. Then we can encode P1, P2 becomes P1, P3 becomes P2, and we continue the search.

So now we are left with the difficult case. We have 3 consecutive matches, the limit between P3 and P2 is not yet settled (we would need P4 to decide), and depending on this decision, it impacts the limit between P1 and P2.

Let's give an example :

P1 = 0

ML1 = 8

E1 = P1 + ML1 = 8

P2 = 5

ML2 = 10

E2 = P2 + ML2 = 15

P3 = 12

ML3 = 12

P3-E1 = 4 : This is enough to "pay for P2"

But should i do :

ML1 = 8 ; ML2 = 4

or

ML1 = 5; ML2 = 7 ?

They look equivalent, but they are not, since the entropy difference between 4 and 5 is likely to be higher than the entropy difference between 7 and 8.

Therefore, if we keep the objective of a total length of 12, the first choice is the best one.

However, it's not yet certain that P3 will actually start at 12. It all depends on P4, which is not yet known.

For example, if P4=E2=15, then obviously P3 disappears, and the better choice is ML1 = 5, ML2 = 10.

But, if no P4 is found, then P3=12, and the better choice is ML1 = 8, ML2 = 4.

So, if we want to decide now, we have to accept that the best choice is not yet known, and we have to settle with something sub-optimal. As we are already working on an approximation, this should not be considered a big issue, as long as the approximation is "good enough".

For programming simplification, we'll assume that the second solution is the better one, shortening ML1 as is necessary to make room for P2.

It means we can now encode P1. P2 becomes P1, P3 becomes P2, and we continue the process.
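The case analysis above can be condensed into a small decision function. This is only one possible reading of the rules, written as a sketch: the enum and function names are invented, and VERY_SMALL_LENGTH is a placeholder value, since the post does not give a figure for it.

```c
#include <stddef.h>

#define VERY_SMALL_LENGTH 2   /* hypothetical threshold, not given in the post */

typedef enum {
    DROP_P2,              /* P3 < E1 : P2 is discarded, P3 becomes P2              */
    ENCODE_P1_DROP_P2,    /* P3 == E1 or barely above : encode P1, P3 becomes P1   */
    SHORTEN_P1_ENCODE     /* otherwise : cut P1 at P2, encode it, shift P2 and P3  */
} action_t;

/* Decides what to do with three overlapping matches, given P1, ML1 and P3. */
static action_t decide(size_t p1, size_t ml1, size_t p3)
{
    size_t e1 = p1 + ml1;
    if (p3 < e1) return DROP_P2;
    if (p3 < e1 + VERY_SMALL_LENGTH) return ENCODE_P1_DROP_P2;
    return SHORTEN_P1_ENCODE;
}

/* Length of P1 once it has been shortened to make room for P2. */
static size_t shortened_ml1(size_t p1, size_t ml1, size_t p2)
{
    size_t room = p2 - p1;
    return (room < ml1) ? room : ml1;
}
```

With the example values (P1 = 0, ML1 = 8, P2 = 5, P3 = 12), the function selects the shortening branch and ML1 becomes 5, which matches the simplification chosen above.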

So here we have an algorithm, which tries to create a "nearly optimal" match distribution, taking local decisions to favor longer matches.

As a great property, it searches only N+1 lists of matches per series of N consecutive matches, which is a great time saver compared to Optimal Parsing.

Time for some evaluation. How well does it fare ?

Well, compared to the deep lazy match strategy implemented into Zhuff (-c2 mode), surprisingly little. The compression ratio barely improved by about 1%. At least, it's a gain...

There are several reasons to explain this disappointing result.

First, the deep lazy match strategy of Zhuff v0.8 -c2 is already an advanced parsing algorithm. It heavily favors larger matches, and is complemented by a small memory to re-fill skipped space. So it's pretty good actually, which limits remaining potential savings.

But the deep lazy match strategy of Zhuff 0.8 requires on average 3xN forward searches per N matches. This is in contrast with the strategy in this post, which only requires N+1 searches, albeit more complex ones (forward & backward). As a consequence, the new strategy is 50% faster than the previous deep lazy match one. Now it looks better.

Second, the proposed strategy is only based on maximizing match length, deliberately forgetting any other cost contributor, such as, for example, offset length.

In a variable offset representation, small offsets are very likely to cost less, if not much less than larger ones. It's still possible to use this property "tactically", by using the smallest known offset able to provide the selected match length, but that's just an opportunistic gain, not an advantage that the selection algorithm takes into consideration.

Moreover, the full list of candidates can only be built for the first position P1. For the next ones (P2, P3), only a reduced list is likely to be found, since we learn P2 and P3 positions during the backward search. As a consequence, quite frequently, only a single offset is known (the longest one). This further limits the "opportunistic" offset selection gain.

For Zhuff, the offset cost varies from 9 to 22 bits. More realistically, it hovers between 12 and 21 bits. That's more than one byte of difference. Knowing that a literal is also compressed, with an average cost of about 6 bits per literal (depending on source file), a long offset (21 bits) costs noticeably more than a small offset plus 1 literal (12 + 6 = 18 bits).

In fact, to select the better choice, it would be necessary to properly estimate, at each step, the cost of each contributor : offset, match length, literals and flags.

Well, that's the whole point of Optimal Parsing...

Sunday, December 4, 2011

Fast sequence comparison

A straightforward way to achieve this function using C code would look like this :

while (*(bytePos+len) == *(ComparePos+len)) len++;

It works well, and is trivial to understand. However, we are giving away a critical property of modern CPUs : they can process a whole WORD in a single step. For a 32-bit CPU, it means that 4 bytes can be compared in a single instruction. This is expected to be faster than loading and comparing each single byte 4 times.

An optimised comparison function then looks like this :

while (*(int32*)(bytePos+len) == *(int32*)(ComparePos+len)) len+=4;

if (*(int16*)(bytePos+len) == *(int16*)(ComparePos+len)) len+=2;

if (*(bytePos+len) == *(ComparePos+len)) len++;

While more complex, this version will provide better performance, especially for longer matches. It has however two drawbacks.

The first problem comes from the very need to compare int32 WORD values. Most modern CPUs will have no problem with that, but some, such as ARM, will require these values to be aligned. This means that the WORD value must start at an address which is a multiple of 4. Well, since compression algorithms have to compare sequences which start at arbitrary positions, this condition cannot be guaranteed. ARM will have to live with the simpler comparison loop.

The second problem comes from the trailing comparisons. On leaving the main loop, since we know that we have fewer than 4 identical bytes, we still need to check whether there are 0, 1, 2 or 3 of them. This can be optimally achieved by using 2 comparisons.
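As a side note, a commonly used C idiom to read a 32-bit word from an arbitrary address without an alignment-sensitive pointer cast is to go through memcpy. The sketch below is not the LZ4 code: the names read32 and count_match are invented here, and the `limit` parameter is an addition of this example (the loops above have no end-of-buffer check). Whether the compiler turns the memcpy into a single load depends on the compiler and the target, so on strict-alignment CPUs this may or may not be faster than the byte loop.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Reads 4 bytes from any address; memcpy has no alignment requirement. */
static uint32_t read32(const unsigned char *p)
{
    uint32_t v;
    memcpy(&v, p, sizeof v);
    return v;
}

/* Counts identical leading bytes of a and b, never reading past `limit` bytes. */
static size_t count_match(const unsigned char *a, const unsigned char *b, size_t limit)
{
    size_t len = 0;
    /* Word-sized steps while a full 32-bit word still fits in the buffers. */
    while (len + 4 <= limit && read32(a + len) == read32(b + len)) len += 4;
    /* Byte-sized steps for the tail. */
    while (len < limit && a[len] == b[len]) len++;
    return len;
}
```

For instance, comparing "abcdefgh12" with "abcdefgX12" over 10 bytes returns 7 : one full word, then three single bytes.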

Not bad, but still, it means there is a minimum of 3 comparisons to exit this function, and comparisons aren't good for CPU pipelines, especially unpredictable ones. The CPU will try to "guess" the result of these comparisons, in order to keep its pipeline busy by speculatively executing the next instructions. But if it guesses wrong, it will have to stall and flush the whole pipeline, resulting in a significant performance penalty.

For this very reason, it can be preferable to use mathematical formulas to get the same result, even when they are more complex, since they avoid branching, ensuring a predictable CPU throughput.

In our situation, we want to get rid of the trailing comparisons and end up with a mathematical formula which gives us the number of continuous identical bytes, starting from the first one. For the rest of this post, we'll assume a little-endian world, in which the first byte is the lowest one.

We know that at least one bit is different, since otherwise we would still be in the main loop.

To find this bit, we will use a simple XOR operation between the compared sequences :

difference = *(int32*)bytePos ^ *(int32*)ComparePos;

If (difference==0), then both sequences are identical.

Since they are not, one bit 1 at least exists. Finding how many bytes are identical between the 2 sequences is now equivalent to finding the rank of the smallest bit 1.

A naive strategy to find the rank of the lowest bit 1 would be to test bits one by one, such as :

while ((difference&1)==0) { difference>>=1; rank++; }

But obviously, this is not going to be our strategy, since we end up with even more comparisons than we started with.

Enter Nicolaas de Bruijn.

The De Bruijn sequence will help us to transform this problem into a mathematical formula. It states that, given an alphabet A with k elements, there is at least one cyclic sequence C within which any possible sub-sequence of size n using alphabet A exists exactly once. It even provides a methodology to build one.

Such a cyclic sequence can become terribly large. We are lucky that for computers, A is just a 2-element alphabet, bits 1 & 0. But we still need to manage n.

We'll do so by keeping only the lowest bit 1 from the xor'ed difference vector. It can be achieved thanks to a simple trick :

LowestBitOne = difference & -difference;

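It works because we are in a two's complement world. To summarize, given a value (i), its negative value (-i) can be obtained by inverting all bits, and then adding 1. After inversion, every bit differs from the original, so the AND would be zero everywhere (since 1 & 0 = 0). The +1 then carries through the trailing 1s of the inverted value, which are the trailing 0s of (i), and sets the bit at the position of the lowest bit 1 of (i). As a consequence, all bits of the result are zero except the last (lowest) bit 1.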
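Here is the same trick as a tiny function. It is written with an unsigned type, which is a choice made for this sketch : negating an unsigned value is well defined in C and produces the same two's complement bit pattern, whereas signed arithmetic can overflow.

```c
#include <stdint.h>

/* Keeps only the lowest bit set to 1; returns 0 when difference == 0. */
static uint32_t lowest_bit_one(uint32_t difference)
{
    return difference & (0u - difference);
}
```

For example, 12 (binary 1100) gives 4 (binary 0100), and 0x00FF0000 gives 0x00010000.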

Now, only the lowest bit 1 remains, which means we have only 32 possible values to consider, and they are all 2^N.

Thanks to the De Bruijn theorem, we now can map these 32 values into a small table of size 32.

We will create a DeBruijn bit sequence which maps all values from 0 to 31 into it (n=5). Since multiplying by 2^N is equivalent to left-shifting by N, the analogy with DeBruijn becomes obvious : we want a bit sequence which, when shifted left bit by bit, produces all possible values between 0 and 31 exactly once.

A construction method exists to build such a bit sequence, based on a Hamiltonian path. It's a bit complex, so here is one such solution for 5 bits :

00000111011111001011010100110001

In theory, the sequence is supposed to be cyclic, but since we will only shift left, we fill the higher bits with 0 in order to "simulate" cyclic behavior.

It might not look obvious that all values from 0 to 31 are present in this chain, so you can also try it for yourself to verify it.

Knowing the 5-bit value produced by shifting the above De Bruijn sequence is now equivalent to knowing the shift which was used. So it provides the result we are looking for with a simple table lookup :

DeBruijnIndex = (0x077CB531 * LowestBitOne) >> 27;

Result = DeBruijnTable[DeBruijnIndex];
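To make the lookup concrete, here is a self-contained sketch. It is not the LZ4 source : the function names are invented, and the table is filled at start-up by shifting the constant itself rather than being written out by hand. Each entry directly stores the number of identical low bytes (bit rank divided by 8), and unsigned 32-bit arithmetic is used so that the multiplication wraps around as intended.

```c
#include <stdint.h>

static unsigned char debruijn_bytes[32];

/* For each possible rank of the lowest bit 1, record the byte it belongs to.
 * Shifting the constant left by `bit` equals multiplying it by 2^bit. */
static void build_debruijn_table(void)
{
    for (uint32_t bit = 0; bit < 32; bit++) {
        uint32_t index = (0x077CB531u << bit) >> 27;
        debruijn_bytes[index] = (unsigned char)(bit / 8);
    }
}

/* x and y are two 32-bit words known to differ (x != y).
 * Returns how many of their low-order bytes are identical (0 to 3).
 * On a little-endian machine, low-order bytes are the first bytes in memory. */
static unsigned identical_low_bytes(uint32_t x, uint32_t y)
{
    uint32_t difference = x ^ y;
    uint32_t lowest = difference & (0u - difference);
    return debruijn_bytes[(0x077CB531u * lowest) >> 27];
}
```

After calling build_debruijn_table() once, comparing 0x11223344 with 0x11AA3344 returns 2 : the two lowest bytes (0x44 and 0x33) are identical, the third one differs.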

And here we are, we have changed 2 comparisons into 3 mathematical operations and one table lookup. Is that a gain ?

Well, according to the latest LZ4 benchmark, it seems it is. Gains vary from negligible to measurable, but are always positive, so on average it seems a step in the right direction.

This trick is now integrated into LZ4 r41 and later versions.

Monday, November 28, 2011

Zhuff gets upgraded (v0.8)

As a first implementation of the recently proposed compressed stream format, here comes a new version of Zhuff, v0.8. It was indeed my main target, since the previous format was incompatible by design with Pipe mode.

Therefore, this new version comes with all the features recently introduced into LZ4, such as :

- All compression levels into a single binary (this removes the need for separate Zhuff-HC and Zhuff-Max binaries)

- Pipe mode support

- Windows Installer

- Context Menu integration (windows installer version only)

- Directory Compression with shar (windows installer version only)

and some other minor improvements :

- New benchmark mode with multiple files

- Overwrite confirmation mode

- Silent/Verbose modes

- Pause on exit

Zhuff algorithm itself got a small change from v0.7.

It introduces an early "entropy estimator", which tries to evaluate whether it is worthwhile to compress a sub-stream using the entropy coder Huff0. It works pretty well, and saves some CPU cycles; however, the effect remains small, barely noticeable, at about 2-3% more speed.

Therefore, this version's main focus is on bringing more features.

You can download it here.

Thursday, November 24, 2011

A format for compressed streams, part 2

Following my recent post, here is what i could come up with after a few minutes of thinking : 2 bytes might be enough for my needs.

Well, mostly. To begin with, a magic number is still necessary to distinguish a valid stream from random garbage. 4 bytes seems to be the standard size for that role. With an unusual bit layout, the odds of mistaking random data for a valid stream should be pretty low (about 1 in 2^32, i.e. roughly 1 in 4 billion, if correctly selected).

Since a stream identity must lead to a compatible compression decoder, this gave me the idea of merging the decoder version with the magic number. The more decoder versions are supported, the less effective the 4-byte header is at rejecting "garbage" streams. But well, since we start at 32 bits, even a reduced identifier length (28-30 bits) should do its job properly.

The next thing we need is a header version. This is different from the decoder version, since we may add new properties to the stream format while not modifying the compression decoder itself. It's impossible to guess how many versions will be developed in the future, so let's settle with 2 bits for now (4 values) and reserve a 3rd bit just in case. Even if we run out of version numbers, it will be possible to use one of the last values to add a few bytes to the header, where the version number can be extended.
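As an illustration of this merge, the sketch below keeps the upper 28 bits as the identifier and the lower 4 bits as the decoder version. Both the split and the BASE_MAGIC value are assumptions made up for this example; the post does not specify them.

```c
#include <stdint.h>

#define VERSION_BITS 4u            /* assumed : 4 bits of version, 28 bits of identifier */
#define BASE_MAGIC   0x0A5C3E90u   /* hypothetical value, low VERSION_BITS set to zero   */

/* Returns the decoder version carried by `magic`,
 * or -1 if the identifier part does not match (probably not a valid stream). */
static int decoder_version_from_magic(uint32_t magic)
{
    uint32_t version_mask = (1u << VERSION_BITS) - 1u;
    if ((magic & ~version_mask) != BASE_MAGIC) return -1;
    return (int)(magic & version_mask);
}
```

With 4 version bits, 16 out of the 2^32 possible values are accepted as valid, which is the "reduced identifier length" effect described above.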

Next thing, we need to retrieve the size of the (uncompressed) stream. Well, if it can be known. That's not so sure by the way, since in a pipe scenario, there is no way to "probe" for the size of the input stream.

So there must be a way to tell whether the stream size is provided in the header. By the way, how many bytes should be used for this size ? 8 bytes covers everything (that's 16 Exabytes, no less), but is probably too much in most circumstances, where 4 bytes (4 Gigabytes) is good enough. So i'll use 2 bits to distinguish between "No Size provided", 8-Bytes size and 4-Bytes size. While at it, since I've one more available value, i'll open the possibility for 2-Bytes size (64KB).

OK, so now, maybe the stream is cut into multiple independent pieces, called "chunks", for easier multi-threading and random access ? I need another bit to tell.

If there are several "chunks", what is their size ?

For simplicity, in this first version, i will only allow identical "uncompressed" chunk sizes within a single stream. But which one ?

Although I've got my own idea for file-compression scenario with Zhuff compression, maybe some users will have different needs. The smaller the chunk size, the worse the compression ratio, but the better for memory usage and random access. So, in order to open the stream container format to new usage scenarios, i'm willing to take a risk, and make chunk size selectable.

What values should be authorized ?

For a first version, a sensible simplification is to only allow sizes which are powers of 2, so a few bits will be enough.

Anyway, a "chunk size" should not be too small, otherwise compression ratio can be so bad that it is hardly effective. So let's say that the minimum chunk size shall be 1KB.

OK, so if we use "chunk size = 0" to tell that there is no chunk (single continuous stream), in 4 bits, it is possible to represent any 2^n value from 1KB to 16MB, which seems enough for my needs. Let's keep a 5th bit in reserve, just in case new usages require larger chunks.
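A possible mapping for this 4-bit field is sketched below. The exact correspondence between code and size (code 1 for 1KB, each increment doubling the size) is an assumption, chosen because it is the simplest one that covers the stated range.

```c
#include <stdint.h>

/* Converts the 4-bit chunk size code into a size in bytes.
 * Code 0 means "no chunk" (single continuous stream) and returns 0.
 * Codes 1 to 15 map to 1KB, 2KB, ... up to 16MB. */
static uint32_t chunk_size_from_code(unsigned code)
{
    if (code == 0) return 0;
    return (uint32_t)1024 << (code - 1);
}
```

Code 15 gives 1024 << 14 = 2^24 bytes, which is the 16MB upper limit.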

Fine, I've got my main stream parameters. I still need some bits for other planned functions, such as the presence of an offset table for random access, header and data chunk checksums, and alignment rules. But there are still enough bits for that within a 2 bytes option header.

So, it gives :

4 Bytes : Base Magic Number + Decoder Version

2 Bytes : Options (detailed)

- bits 0-1 : Header Version

- bit 2 : Reserved (extended version number) (must be zero)

- bits 3-6 : Chunk Size (or no chunk)

- bit 7 : Reserved (extended chunk size) (must be zero)

- bits 8-9 : Stream Uncompressed Size format (0, 2, 4 or 8 Bytes)

- bits 10-15 : Reserved (Offset Table, Checksums, Alignment) (must be zero)

0, 2, 4 or 8 Bytes : Stream Uncompressed Size

Then repeat N Times (with N = Number of chunks)

4 Bytes : Compressed Chunk Size

Data Chunk
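The 2-byte option field above could be packed and checked along these lines. This is a sketch : the struct and function names are invented, bit 0 is taken to be the least significant bit, and the mapping of the size format codes (0, 1, 2, 3 to 0, 2, 4, 8 bytes) is an assumption, since the layout only lists the possible sizes.

```c
#include <stdint.h>

typedef struct {
    unsigned header_version;  /* bits 0-1 */
    unsigned chunk_code;      /* bits 3-6, 0 = no chunk */
    unsigned size_format;     /* bits 8-9 */
} stream_options;

/* Reserved bits : 2, 7 and 10-15. They must be zero in this version. */
#define RESERVED_MASK 0xFC84u

static uint16_t pack_options(stream_options o)
{
    return (uint16_t)((o.header_version & 3u)
                    | ((o.chunk_code & 15u) << 3)
                    | ((o.size_format & 3u) << 8));
}

/* Returns 0 on success, -1 if a reserved bit is set. */
static int unpack_options(uint16_t bits, stream_options *o)
{
    if (bits & RESERVED_MASK) return -1;
    o->header_version = bits & 3u;
    o->chunk_code     = (bits >> 3) & 15u;
    o->size_format    = (bits >> 8) & 3u;
    return 0;
}

/* Number of bytes used by the "Stream Uncompressed Size" field (assumed mapping). */
static unsigned size_field_bytes(unsigned size_format)
{
    static const unsigned char bytes[4] = { 0, 2, 4, 8 };
    return bytes[size_format & 3u];
}
```

Rejecting any value with a reserved bit set is what lets a current decoder refuse streams written with future extensions it does not understand.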

So far so good. This format even allows me to naturally handle non-compressed chunks : if "compressed chunk size = uncompressed chunk size", then obviously the chunk is uncompressed.

But a non trivial difficulty emerges : should the Stream Uncompressed Size be unknown, what happens for the last chunk ?

Well, in this situation, the last chunk's uncompressed size is unknown.

This does not happen if we know Stream Uncompressed Size : we can automatically calculate the number of chunks, and the uncompressed size of the last chunk.

To solve this situation, i will "flag" the last chunk. Let's use the highest bit for the flag. In this case, "compressed chunk size" will be followed by a 4-Bytes value, which is the "uncompressed chunk size". Problem solved.
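Reading such a chunk header could look like the sketch below. The names are invented, and the byte order is an assumption (little-endian), as the post does not specify one.

```c
#include <stddef.h>
#include <stdint.h>

#define LAST_CHUNK_FLAG 0x80000000u   /* highest bit of the 4-byte size field */

typedef struct {
    uint32_t compressed_size;
    uint32_t uncompressed_size;
    int      is_last;
    size_t   header_bytes;            /* 4 for a regular chunk, 8 for a flagged one */
} chunk_header;

/* Reads a 32-bit little-endian value (assumed byte order). */
static uint32_t read_le32(const unsigned char *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8)
         | ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Parses a chunk header from `p`, with `avail` readable bytes.
 * `regular_size` is the stream-wide uncompressed chunk size.
 * Returns 1 on success, 0 if there are not enough bytes. */
static int parse_chunk_header(const unsigned char *p, size_t avail,
                              uint32_t regular_size, chunk_header *out)
{
    if (avail < 4) return 0;
    uint32_t field = read_le32(p);
    out->is_last = (field & LAST_CHUNK_FLAG) != 0;
    out->compressed_size = field & ~LAST_CHUNK_FLAG;
    out->uncompressed_size = regular_size;
    out->header_bytes = 4;
    if (out->is_last) {
        /* A flagged chunk carries its own uncompressed size. */
        if (avail < 8) return 0;
        out->uncompressed_size = read_le32(p + 4);
        out->header_bytes = 8;
    }
    return 1;
}
```

One consequence of borrowing the highest bit is that the compressed chunk size is limited to 31 bits, which is far above the 16MB maximum chunk size anyway.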

[Edit]

Another trick regarding the last chunk would be to detect its end simply because we reach the end of input (EOF marker). Then, no flag would be needed. The "compressed size" could also be easily calculated, since the end of the compressed stream is known. It would save a few bytes.

The problem is, it only works if the stream is the only data in the file or the pipe. Should it be followed by any other data, this detection method would not work.

Could that happen ? Surely. For instance, multiple streams may be stored in a single archive file. A potential way to solve the problem is then to require the "higher" format to know the size of each compressed stream. It would then be in charge of passing it to the stream decoder.

Such capability seems common for an encapsulating format, but i feel nonetheless uneasy with the requirement...

Friday, November 18, 2011

A format for compressed streams

I've been pretty careful in designing compression formats & algorithms, but much less detailed when it comes to the compressed stream format. A good example of this situation is given in this post, where the LZ4 compressed format is precisely defined, but not a single word is mentioned regarding the stream format generated by LZ4.exe.

But there is a difference. The stream format has more responsibilities than "only" compression.

For a simple example, a stream typically starts with a "Magic Number", which hints to the decoder that the stream being decoded is most probably a valid one : if the magic number does not match, then what follows will be nonsense to the decoder. It's one of many ways to avoid some basic errors, such as feeding the decoder with random data.
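A magic number check can be sketched in a few lines. The value `EXAMPLE_MAGIC` below is purely hypothetical (it is not the one used by LZ4.exe), and the 4-byte little-endian encoding is also an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define EXAMPLE_MAGIC 0x12345678u   /* hypothetical value, for illustration only */

/* Returns 1 if the stream starts with the expected magic number, 0 otherwise. */
static int hasValidMagic(const unsigned char* src, size_t srcSize)
{
    uint32_t magic;
    assert(src != NULL);
    if (srcSize < 4) return 0;   /* too short to even hold a magic number */
    /* ASSUMPTION: the magic number is stored little-endian */
    magic = (uint32_t)src[0] | ((uint32_t)src[1] << 8)
          | ((uint32_t)src[2] << 16) | ((uint32_t)src[3] << 24);
    return magic == EXAMPLE_MAGIC;
}
```

With a 32-bit value, random data passes this test only about once in 4 billion attempts, which is why it is a hint of validity rather than a proof.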

Format definitions are very important, since once settled, they are keys to create compatible applications. Therefore, once published, a format should either not change, or guarantee that any future change will not break existing applications. This is called forward and backward compatibility, and is not trivial.

Since a great deal of attention must be put into this definition, instead of rushing one, I want to underline in this post what the objectives of the format are, and how they are achieved, in an attempt to both clarify my mind and get comments.

First definition : what is a stream ?

At its most basic level, a file satisfies the definition of a stream : ordered bytes, with a beginning and an end.

A file is in fact a special stream with added properties. Its size can be known beforehand. And in most circumstances, it is likely to be stored on a seekable medium, such as an HDD.

But a stream is not limited to a file. It can also be a bunch of files grouped together (tar), or some dynamically generated data. It may be read from a streaming medium, such as a pipe, with no seek capability (no way to "jump" forward or backward) and no prior knowledge of its size : we'll learn that the stream is finished on hitting its end.

OK, so now we are getting into the "hard" part : what are the missions of the Compressed Stream Format ?

Here is a list, off the top of my head, sorted in priority order :

1) Be compatible with both streaming and seekable media, in both directions (compression and decompression)

2) Detect valid streams from invalid ones

3) Designed for backward & forward compatibility

4) Control data validity (e.g. checksum)

5) Optionally slice the stream into multiple independent "chunks", for multi-threading purpose

6) Offer user control over chunk size

7) Allow quick jumps to a specific location in the stream (seekable media only)

8) Provide a way to correct transmission errors, or at least retrieve as much data as possible from a transmission error

9) Enforce alignment rules

Items 1 to 5 seem really compulsory, while those beyond that point are more questionable.

There are other missions which I'm still unsure should join the list.

For example, should the stream format embed a definition of the compression algorithm ?

That's not what I'm doing currently : the "magic number" is also associated with a specific family of compatible decoders, and therefore already performs that role.

Should it allow some user comments ?

Here, I'm expecting this feature to rather be part of an Archive format.

What about file names ? Should there be a place for them in the format ?

In my opinion, this is exactly the role of an Archive format : listing, sorting, placing file names and directory structure. Furthermore, embedding a name into a compressed stream format disregards the fact that some streams are not single files.

Saturday, October 22, 2011

Progressive Hash Series : a new method to find repetitions

I've been investigating an old idea which had been occupying my mind, in order to create a fast approximate match finder for long range searches. Although it took some time to "think" the problem through correctly, in the end, the solution looks surprisingly simple.

The idea starts from a familiar structure, a Hash Table.

In order to create a fast look-up algorithm, we just need to hash the initial "minmatch" sequence, and store its position in the table. Later on, when checking for the same sequence, we'll find it in its cell.

OK, that's fine, but even without collisions, it only provides us with a single answer, which is the closest sequence starting with minmatch bytes. But maybe, somewhere farther away, there is a better, i.e. longer match.

Looking at these other possibilities can be done in several ways. A simple method consists in linking all positions sharing the same hash value into a list. It's called Hash Chain, and it works fairly well. But if the sequence is really redundant, the number of positions searched can become terribly high, and with increased depth of search, it becomes prohibitive.

Hence the simple idea : in order to avoid waiting forever, the search is stopped after a number of attempts. The higher the number, the better the result. The lower the number, the faster the search. This is the trade-off.

Basically, it means that for large distance search, there is no shame in making a "partial search" in order to achieve acceptable speed.

OK, so since the number of searches can be arbitrarily limited, why not store them in a table in the first place ? The row number is given by the hash, and all corresponding positions are stored in order within the row. This is much faster to run, since there are fewer memory jumps, and easier to set up.

This method is not so new, and has been especially championed in the early 90's by Ross Williams, for his compressor LZRW-3a. Therefore we'll call it LZRW.
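To make the row idea concrete, here is a minimal sketch of such a table, where the row size is the number of attempts. The sizes, the multiplicative hash and all names (`RowTable`, `rowInsert`, `rowFindLongest`) are illustrative assumptions, not taken from any actual compressor.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MINMATCH  4
#define HASH_LOG  12                  /* illustrative table size */
#define NB_ROWS   (1u << HASH_LOG)
#define ROW_SIZE  4                   /* = number of attempts */
#define NO_POS    0xFFFFFFFFu         /* marks an empty slot */

typedef struct { uint32_t pos[NB_ROWS][ROW_SIZE]; } RowTable;

/* Hash of the first MINMATCH (4) bytes; the constant is an arbitrary choice. */
static uint32_t hashSeq(const unsigned char* p)
{
    uint32_t v;
    memcpy(&v, p, sizeof v);
    return (v * 2654435761u) >> (32 - HASH_LOG);
}

static void rowInit(RowTable* t) { memset(t->pos, 0xFF, sizeof t->pos); }

/* Newest position goes first; the oldest one falls off the end of the row. */
static void rowInsert(RowTable* t, const unsigned char* base, uint32_t pos)
{
    uint32_t* row = t->pos[hashSeq(base + pos)];
    memmove(row + 1, row, (ROW_SIZE - 1) * sizeof row[0]);
    row[0] = pos;
}

/* Tests at most ROW_SIZE candidates (all inserted before cur).
   Returns the longest match length, or 0 if none reaches MINMATCH. */
static uint32_t rowFindLongest(const RowTable* t, const unsigned char* base,
                               uint32_t cur, uint32_t end, uint32_t* matchPos)
{
    const uint32_t* row = t->pos[hashSeq(base + cur)];
    uint32_t best = 0;
    int i;
    assert(cur + MINMATCH <= end);
    for (i = 0; i < ROW_SIZE; i++) {
        uint32_t cand = row[i], len = 0;
        if (cand == NO_POS) break;
        while (cur + len < end && base[cand + len] == base[cur + len]) len++;
        if (len >= MINMATCH && len > best) { best = len; *matchPos = cand; }
    }
    return best;
}
```

All candidates of a row sit next to each other in memory, which is where the speed advantage over a linked chain comes from.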

LZRW structure has in my opinion 2 advantages : one is controlled speed, through the size of rows (=Nb of attempts), and the other one is controlled memory, through the selection of table size.

This latter property is critical : most "full search" methods require a huge amount of memory to work properly. By "huge", I mean something in the range of 10x the dictionary size (with the exception of Hash Chains, but they are too slow for long distance searches anyway). So you want to search within a 256MB dictionary ? You need more than 2.5GB of memory. Farewell 32-bit systems.

One has to wonder : is it the right method ? Such an amount of memory is simply prohibitive. Maybe by accepting a "less perfect" search but using less memory, we may nonetheless achieve a better compression rate thanks to the use of longer search distances. This is a position defended by Bulat Ziganshin, for his compressor FreeArc. For this scenario, LZRW comes as an obvious candidate : you can for example set up a 256MB search table, and use it to look into a 1GB dictionary. Now, long distance searches look affordable !

OK, so LZRW works, but there is no miracle : the longer the search, the more time it costs. In the end, the return on investment can be quite low, and with a large number of searches (say for example 256), the search becomes so slow as to become impractical.

This is to be expected : all that is guaranteed by the table is that all elements share the same row, hence the same Hash Value. But that's it. So after finding an element with minmatch common bytes, we'll test another, and another, and so on. This is wasteful. What we want after finding minmatch bytes is to find another position with at least minmatch+1 common bytes.

In order to avoid testing each and every position, several methods are possible. One is to build a Binary Tree on top of the row. It is very efficient. A particularly good implementation of this idea was made by Christian Martelock for his RZM compressor (now discontinued). Unfortunately, it also means even more memory, consumed for the BT structure. And in the end, it is not so much different from a full BT structure over the entire search window, except that we lose full search capability.

OK, I'm looking for something simpler. After checking for a minmatch candidate, I want to look for a minmatch+1 one, straight away. No search nor wandering.

This can be done quite simply : just hash the "minmatch+1" sequence, and store its position directly into a hash table.

OK, so there are several tables you say, one per sequence size ?

Well, why ? No, a single one.

Just share the Hash Table among all the sequences. The simple idea here, is that a given position should be stored only once in the table, either with the hash of its "minmatch" first bytes, or "minmatch +1", or "minmatch+2", well whatever.

So, which sequence size should be stored ? Well, just start with the "minmatch" one. When a new equivalent sequence gets into the same table cell, we just move the old position to its "minmatch+1" hash, replacing its previous slot with the new position. And next time a "minmatch+1" sequence is found, we move the old sequence to "minmatch+2" and so on. So the position will move through the table, up to the point where it is considered "dead", either because it is out of range, or because of an elimination rule.

We obviously have to take into consideration the natural occurrence of collisions in this search structure. And it can happen between any sequence sizes. For example, a newer "minmatch" sequence could be willing to occupy the same slot as a different "minmatch+2" sequence. Well, one has to move on, or be eliminated.

That's where different rules can be applied. The most basic one is that the youngest position always wins. It's good enough, and I recommend it. But it can also be mixed with some more complex heuristic, to avoid losing long-distance anchors too fast. Keep in mind that this structure is created precisely to afford a partial search over a long distance, when there is not enough memory to keep one entry per position.

So we've got our basic idea here, a cross-over between cuckoo hashing and progressively longer hashed sequences. Hence the proposed name, Progressive Hash Series.

Now it is time to put this idea into practice. I've created a simple long-range match finder based on a greedy matching strategy. It's a simple and fast way to compare the above methods. The file tested is enwik9, used in Matt Mahoney's LTCB. This file is very redundant, and particularly intensive for the match finder. LZRW and PHS are compared on their capacity to find longer matches (which translates into better compression), and on their respective speeds. Here are the results :
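The update mechanism described above can be sketched as follows. This is only one possible reading of it, under several assumptions : each cell remembers on how many bytes its position was hashed, the "youngest wins" rule is applied at every step of the cascade, a position is dropped once it would need more than `MAX_LEN` bytes, and the search simply probes every sequence length in turn. The hash (FNV-1a) and all names are illustrative choices.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MINMATCH   4
#define MAX_LEN    12                 /* ASSUMPTION: positions pushed beyond this are dead */
#define HASH_LOG   12
#define TABLE_SIZE (1u << HASH_LOG)

typedef struct { uint32_t pos; uint8_t len; } PhsCell;   /* len == 0 : empty cell */
typedef struct { PhsCell cell[TABLE_SIZE]; } PhsTable;

/* Hash of the first len bytes; a single table is shared by all lengths. */
static uint32_t phsHash(const unsigned char* p, uint32_t len)
{
    uint32_t h = 2166136261u, i;
    for (i = 0; i < len; i++) { h ^= p[i]; h *= 16777619u; }
    return (h ^ (h >> 16)) & (TABLE_SIZE - 1);
}

static void phsInit(PhsTable* t) { memset(t, 0, sizeof *t); }

/* Inserts pos at its MINMATCH hash; each evicted position moves on to the
   hash of one more byte, until it finds a free cell or gets eliminated. */
static void phsInsert(PhsTable* t, const unsigned char* base, uint32_t pos, uint32_t end)
{
    PhsCell carry;
    carry.pos = pos; carry.len = MINMATCH;
    assert(pos + MINMATCH <= end);
    while (carry.len <= MAX_LEN && carry.pos + carry.len <= end) {
        PhsCell* slot = &t->cell[phsHash(base + carry.pos, carry.len)];
        PhsCell old = *slot;
        if (old.len != 0 && old.pos > carry.pos) return;  /* occupant is younger : carry is eliminated */
        *slot = carry;
        if (old.len == 0 || old.pos == carry.pos) return; /* free cell, or same position re-inserted */
        carry.pos = old.pos;                              /* evicted position moves on ... */
        carry.len = (uint8_t)(old.len + 1);               /* ... to the hash of one more byte */
    }
}

/* Probes one cell per sequence length. Returns the longest verified match, 0 if none. */
static uint32_t phsFind(const PhsTable* t, const unsigned char* base,
                        uint32_t cur, uint32_t end, uint32_t* matchPos)
{
    uint32_t best = 0, len;
    for (len = MINMATCH; len <= MAX_LEN && cur + len <= end; len++) {
        PhsCell c = t->cell[phsHash(base + cur, len)];
        uint32_t m = 0;
        if (c.len == 0 || c.pos >= cur) continue;
        while (cur + m < end && base[c.pos + m] == base[cur + m]) m++;
        if (m >= MINMATCH && m > best) { best = m; *matchPos = c.pos; }
    }
    return best;
}
```

Since a hash only hints at a match, every candidate is verified byte by byte, so a collision costs a wasted attempt but never produces a wrong match.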

So what do we learn from these figures ?

First, PHS looks more efficient than LZRW. For an identical amount of memory, it converges faster towards optimal match length. This is particularly telling for the 4-attempts version, which is about as efficient as an 80-attempts LZRW.

Note however that for a given number of attempts, PHS is more complex, and requires more time. For example, the 4-attempts PHS is approximately the same speed as a 14-attempts LZRW. So, in the end, we've got the compression power of an 80-attempts LZRW for the cost of a 14-attempts one. It is still a gain.

This is my first review of this new method, and I guess we have only scratched the surface of it. Further refinements are to be expected ahead.

I have not yet found this idea described anywhere on the net. It is not even mentioned in the thorough Match Finder review by Charles Bloom. So, maybe, after all, this idea might be new.

[Edit] As specified by Charles Bloom, merging several hashes of different lengths into the same table is not new (see comments). However, the update mechanism presented here might be.

Sunday, October 2, 2011

LZ4 in commercial application

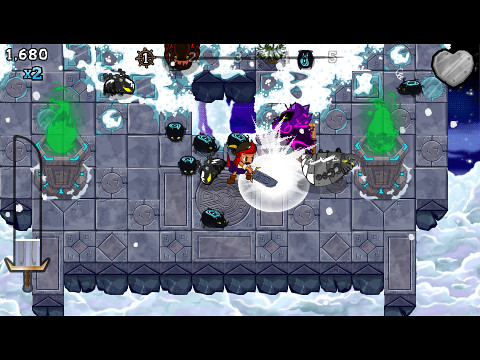

Time for great news. Although I've been informed of a few open-source projects and commercial applications working to integrate LZ4 into their production, this is actually the first time that a company has completed a product with it. A product that you can buy by the way. Moreover, it's kind enough to tell publicly about it.

LZ4 is in use within a video game called 1000 Tiny Claws, by Mediatonic. The company is young but certainly not new, and has already received several good mentions for some of its previous productions, namely "Who's that Flying", and "Monsters (probably) stole my princess" (I really love that title :). Therefore their new game stirs a lot of expectation. Let's wish them great success with their new sky-pirates adventures !

Within the game, LZ4 is used mostly to decompress resources. These are many sprites, background tiles, animations and graphics in "cartoon style". Resources are not limited to graphics, and some text files, profiles and models may also join the fray. But typically graphics tend to inflate the memory budget much faster than the others.

For this use case, LZ4 can be useful thanks to its very fast decoding speed, making the decoding not just painless, but advantageous (i.e. faster !) compared to using resources in plain uncompressed mode. The decoder is also memory-less, which also helps.

The compression part of LZ4 is not used within the game, but obviously, it has been necessary to compress resources before injecting them into the game. For this use, the high compression version LZ4-HC has been put to the task. Not only does it compress 20-30% better, saving space and bandwidth, it also makes the compressed data even easier to decode. So this is all gains for this use case.

and of course, the all-important credit line...

1000TC engine is the work of Gabor Dorka

Note that, at the time the game was created, LZ4-HC was GPL, but there is no problem with that, since LZ4-HC is not shipped within the game. Only the decoder is, which is BSD, and incurs no restriction.

The game is scheduled for October 4th, on the PSN. Now it is time to frag some monsters...

Tuesday, September 20, 2011

Cost of accessing variables

I finally found a way to make the -c1 compression mode of LZ4 compatible with multi-threading.

This mode comes from the earlier LZ4-HC (v0.9) branch, which was created long ago, back in the days when none of my algorithms were multi-thread ready. The only objective was performance, and single thread execution. Heavy use of global variables was part of the deal to reach better speed.

This lesson proved right once again.

The first stage of enabling multi-threading is to get rid of all global and static variables. They simply cannot be shared by several threads. At least not easily.

To do this, you simply encapsulate all necessary variables in a container (a pointer towards a structure), and then pass the container as a parameter of the function. This way, each thread has its own set of data to handle.
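As a minimal sketch of this transformation, with hypothetical names (`CompressionContext`, `markEntry`) and an arbitrary table size :

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TABLE_SIZE 4096   /* arbitrary size, for illustration */

/* Hypothetical container : holds what used to be global/static variables. */
typedef struct {
    uint32_t mytable[TABLE_SIZE];
    uint32_t nbUpdates;
} CompressionContext;

/* The function only touches the state it is given, never a global,
   so two threads working on two contexts cannot interfere. */
static void markEntry(CompressionContext* ctx, size_t number)
{
    assert(ctx != NULL && number < TABLE_SIZE);
    ctx->mytable[number] = 1;
    ctx->nbUpdates++;
}
```

Each thread then creates its own `CompressionContext` and passes it to every call.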

From a code perspective, the changes are very small : just make all prior variables point towards their equivalent in the structure, and there you go. This way, it is surprisingly fast and simple to make code multi-thread compatible.

But there was a catch : speed instantly dropped by 20%. This is a lot, and can be easily measured with benchmark tools.

In a bid to solve this issue, I went back once again to the "global variable" effect. The code remained identical, so the change from Global Variable to Container was the only reason for the 20% drop. Why ? This is the complex part.

In fact, when you access a variable, using for example :

mytable[number] = 1;

the compiler will generate very different ASM code depending on the declaration of mytable.

If mytable is declared as a global or static variable, the compiler knows exactly where the beginning of mytable stands in memory. It also knows the size of the cells. Therefore, it only needs to generate :

mytable-baseAddress + number x mytable-cellSize

to reach the proper memory address.

Now, if mytable is passed as a parameter within a container, it is all different. The first thing to do is to create a pointer named mytable, which points towards the beginning of the table within the container.

Now there are 2 possible situations :

1) mytable's base address is stored in a function variable (a temporary one, on the stack). It needs to be read to collect mytable-baseAddress, and only then can the addition with number x mytable-cellSize happen. mytable-cellSize can be deduced from the pointer type.

This generates one more read for each access.

2) the compiler remembers the association. Therefore, mytable will not be stored as a variable on the stack, but dynamically translated into container-baseAddress + table-baseDelta.

I doubt this is any better than the previous proposition.

In order to avoid such a situation, one solution is to declare some of these variables as local ones. They will be created on the stack. Then, the access cost is almost the same as for global/static variables : a local variable is accessed as base local stack address plus known delta. This is one more addition operation than for global/static variables, but that's ok, it is almost the same performance.

(Note : a post was created discussing this effect earlier this year : http://fastcompression.blogspot.com/2011/03/multi-threaded-entropy-experiment-huff0.html)

The real issue is that you can't create too much data on the stack. Its size is limited.

So, for larger data sets, I see no other way than to allocate the necessary memory (using malloc()) and access it with a pointer. This is bound to cost some performance compared with global/static variables, since there is one more pointer read per access, but I have yet to find a way around it.

Anyway, with a good mixture of local variables and allocated ones, the speed loss of -c1 mode was limited to 6% in a single-thread scenario. Not yet invisible, but still a noticeable improvement compared to the previous situation. And at least, now, the code scales almost linearly with the number of CPU cores available.
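The mixture of both approaches could look like the sketch below : a small table lives on the stack, while a larger one is allocated and reached through a pointer. The function and its purpose (counting distinct bytes and byte pairs) are invented for the example, and the 64KB table merely stands in for a table too large to put on the stack.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Counts distinct bytes and distinct byte pairs in src.
   Returns 0 on success, -1 if the allocation fails. */
static int countDistinct(const unsigned char* src, size_t size,
                         unsigned* nbBytes, unsigned* nbPairs)
{
    /* small table : local, accessed as stack base + known offset */
    unsigned char seenByte[256] = { 0 };
    /* large table : allocated, accessed through one more pointer read */
    unsigned char* const seenPair = calloc(65536, 1);
    size_t i;

    assert(src != NULL && nbBytes != NULL && nbPairs != NULL);
    if (seenPair == NULL) return -1;
    *nbBytes = 0;
    *nbPairs = 0;
    for (i = 0; i < size; i++) {
        if (!seenByte[src[i]]) { seenByte[src[i]] = 1; (*nbBytes)++; }
        if (i + 1 < size) {
            size_t pair = ((size_t)src[i] << 8) | src[i + 1];
            if (!seenPair[pair]) { seenPair[pair] = 1; (*nbPairs)++; }
        }
    }
    free(seenPair);
    return 0;
}
```

Nothing here is global or static, so the function stays thread-safe, and only the accesses to the large table pay for the extra indirection.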

You can grab the latest LZ4 version (v1.1) at its homepage.

[Edit] thanks to Richard Sim for correcting the heap/stack confusion.

This mode comes from the earlier LZ4-HC (v0.9) branch, which was created long ago, back in these days when none of my algorithms was multi-thread ready. The only objective was performance, and single thread execution. Heavy use of global variables were part of the deal to reach better speed.

This lesson proved right once again.

The first stage of enabling multi-threading is to get rid of all global and static variables. They simply cannot be shared by several threads. At least not easily.

To do this, you simply encapsulate all necessary variables in a container (a pointer towards a structure), and then pass the container as a parameter of the function. This way, each thread has its own set of data to handle.

From a code perspective, the changes are very little : just make all prior variables points towards their equivalent in the structure, and there you go. This way, it is surprisingly fast and simple to make a code multi-thread compatible.

But there was a catch : speed instantly dropped by 20%. This is a lot, and can be easily measured with benchmark tools.

In a bid to solve this issue, i went back once again at the "global variable" effect. The code remained identical, so the change from Global Variable to Container was the only reason for the 20% difference drop. Why ? This is the complex part.

In fact, when you call a variable, using for example :

mytable[number] = 1;

the compiler will generate very different ASM codes depending on the declaration of mytable.

If mytable is declared as a global or static variable, the compiler knows exactly where stands the beginning of mytable in the heap. Then it knows the size of the cells. Therefore, it only needs to generate :

mytable-baseAddress + number x mytable-cellSize

to reach the proper memory address.

Now, if mytable is passed as a parameter within a container, it is all different. The first thing to do is to create a pointer named mytable and which points towards the beginning of the table within the container.

Now there are 2 possible situations :

1) mytable base Address is stored into a function variable (temporary one, into the stack). It needs to be read to collect mytable -baseAddress, and only then can the addition with number x mytable -cellSize happen. table-cellSize can be deduced from the pointer type.

This generates one more read for each call.

2) the compiler remembers the association. Therefore, mytable will not be stored as a variable into the stack, but dynamically translated into container-baseAddress + table-baseDelta.

I doubt this is any better than the previous proposition.

In order to avoid such a situation, one solution is to declare some of these variables as local ones. They will be created into the stack. Then, the access cost is almost similar to global/static variables : a local variable is accessed as base local stack address plus known delta. This is one more addition operation than for global/static variables, but that's ok, it is almost the same performance.

(Note : a post was created discussing this effect earlier this year : http://fastcompression.blogspot.com/2011/03/multi-threaded-entropy-experiment-huff0.html)

The real issue is that you can't put too much data on the stack. Its size is limited.

So, for larger data sets, I see no other way than to allocate the necessary memory (using malloc()) and access it through a pointer. This is bound to cost some performance compared with global/static variables, since there is one more pointer read per access, but I have yet to find a way around it.
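As an illustration of the allocated variant, the sketch below keeps a large table on the heap inside a per-thread context. The type and function names (work_ctx, record_last_positions, and so on) are hypothetical, and calloc() is used instead of malloc() only to get a zeroed table.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical per-thread working context: the large table lives on the heap. */
typedef struct {
    unsigned *table;
    size_t    entries;
} work_ctx;

/* Returns 0 on success, -1 if the allocation failed. */
int work_ctx_init(work_ctx *ctx, size_t entries)
{
    assert(ctx != NULL && entries > 0);
    ctx->table = calloc(entries, sizeof *ctx->table);
    ctx->entries = (ctx->table != NULL) ? entries : 0;
    return (ctx->table != NULL) ? 0 : -1;
}

void work_ctx_free(work_ctx *ctx)
{
    free(ctx->table);
    ctx->table = NULL;
    ctx->entries = 0;
}

/* Stores, for each byte value, the 1-based position where it was last seen. */
void record_last_positions(work_ctx *ctx, const unsigned char *data, size_t len)
{
    /* The base pointer is read once from the context; whether it then
       stays in a register is up to the compiler. */
    unsigned *const table = ctx->table;
    assert(table != NULL && ctx->entries >= 256);
    for (size_t i = 0; i < len; i++)
        table[data[i]] = (unsigned)(i + 1);
}
```

Each thread would own its own work_ctx, so nothing is shared and no locking is needed.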

Anyway, with a good mixture of local and allocated variables, the speed loss of the -c1 mode was limited to 6% in the single-thread scenario. Not yet invisible, but still a noticeable improvement over the previous situation. And at least the code now scales almost linearly with the number of CPU cores available.

You can grab the latest LZ4 version (v1.1) at its homepage.

[Edit] thanks to Richard Sim for correcting the heap/stack confusion.

Monday, September 19, 2011

LZ4 reaches v1.0

Finally, a long-planned update of LZ4 is out today, reaching v1.0. It's time to integrate all the various compression versions into a single binary, with the user able to select the desired compression level with a simple switch (-c0, -c1 or -c2).

It is also time to integrate newer versions of the algorithm, since there have been quite a few improvements since the latest release. As a consequence, v1.0 is faster and more efficient, as can be seen in this table:

| | v0.9 | v1.0 |
|---|---|---|
| compression ratio | 2.061 | 2.075 |
| compression speed | 260 MB/s | 295 MB/s |
| decompression speed | 810 MB/s | 990 MB/s |
| memory usage | 33MB | 17MB |

If you are using the open-source version of LZ4 or LZ4-HC, it is recommended to update to the latest source release.

You can grab this latest win32 binary at the LZ4 Homepage.

Saturday, September 3, 2011

LZ4-HC : High Compression LZ4 version is now Open Sourced

It's been quite a while since my last post on this blog. Well, with summer ongoing, I was busy having a life. You know, things that happen outside...

Anyway, with September ringing in, it's time to get back to work.

I've spent my first few days complying with a long-standing request: wtf, where is LZ4-HC?

Well, as I replied a few times, LZ4-HC was not provided; that is, up to now.

LZ4-HC is now Open Sourced, and provided as a sample demo for the MMC Search Algorithm, which it makes heavy use of.

That's the main difference with regular LZ4: the HC version performs a full search, in contrast with the fast scan of the "regular" LZ4. This typically improves the compression ratio by 20%. But speed is also heavily impacted: the HC version is between 6x and 10x slower than the fast one.

Nonetheless, the compression advantage can be worthwhile, especially in an "offline compression" scenario, where the time needed to compress a resource is not important, since data only gets decompressed during execution.

There is also a second difference: since MMC is GPL, LZ4-HC is GPL too. Note that this does not change anything regarding the LZ4 license, which remains BSD.

Is that an issue? Not necessarily. LZ4-HC and LZ4 use the very same format (indeed, the decoding function is one and the same), so you can feed the output of LZ4-HC to the LZ4 decoder, and the other way round.

Therefore, the following scenario is valid : you can use LZ4-HC to compress your data, and ship your commercial product with the compressed data streams and the (BSD) LZ4 decoder. There is no problem with that.

You can also create your own private application using LZ4-HC to compress your resources, without ever disclosing your source code. This is valid as long as the binary is not distributed.

Only if you want to distribute your application will you need to comply with the GPL license to integrate the LZ4-HC compression routine. Nothing unusual here: either open-source your code or acquire a commercial license.

Hopefully, although legal matters are boring, this one is not too complex to understand, for a change.

You can grab the LZ4-HC Source Code here; it's been compiled and tested successfully under Windows, 32-bit Linux and 64-bit Linux.

[Edit]: LZ4HC is now hosted on its own web page: http://code.google.com/p/lz4hc/. The latest version also improves compression ratio by a few %.

Monday, June 6, 2011

LZ4 - Improved performance

Since Przemyslaw Skibinski provided a benchmark dedicated to comparing fast compressors, I've been interested in a direct comparison between LZ4 and Google's snappy.

Snappy is a very fast compressor, but its source code is considerably more complex than LZ4's. At first, it may look like this complexity would cost quite a few CPU cycles, but the results say otherwise: snappy proves to be a very speedy competitor.

Therefore, I took a closer look at LZ4, looking for potentially wasted cycles, but couldn't find a way to make the code any leaner than it already is. A few tiny % could be grabbed here and there, but the speed difference remained too large to be explained by the algorithm alone.

It turns out that the difference comes mostly from a simple reason : cache policy.

LZ4 was designed with the L2 cache limit in mind. In order to ensure fast compression, all data should be retrieved from the L2 cache, which is relatively fast, at about 10 cycles per access.

Fair enough, but there is even faster: the L1 cache can provide access within 3 cycles or less. It looks like a small difference, but it adds up with each data access. In the end, the total speed difference can reach 20%, exactly what LZ4 was missing to reach snappy's speed.

And ... that's all it takes. Modifying the performance parameter "HASH_LOG" to keep most data accesses within the L1 cache proved enough to cover the difference.

Therefore, starting from revision r10, the default value of this parameter is 12, for 16KB, which is just the right amount for the Intel L1 data cache. AMD users may try 13 for 32KB, since their L1 data cache is twice as large.

Addendum: note that the above figures are correct only for 32-bit systems. 64-bit ones will use twice as much memory.
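The relationship between "HASH_LOG" and memory usage can be sketched as follows. This assumes the table holds one pointer-sized cell per hash value (4 bytes on 32-bit systems, 8 bytes on 64-bit ones), which is an inference from the figures quoted above rather than something read from the source; hash_table_bytes is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>

/* Memory used by a hash table of (1 << hash_log) cells,
   each cell being cell_size bytes wide. */
size_t hash_table_bytes(unsigned hash_log, size_t cell_size)
{
    assert(hash_log < 8 * sizeof(size_t));
    return ((size_t)1 << hash_log) * cell_size;
}
```

With 4-byte cells, hash_table_bytes(12, 4) gives 16KB, hash_table_bytes(13, 4) gives 32KB and hash_table_bytes(17, 4) gives 512KB. With 8-byte cells, every one of these figures doubles.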

There is just a little catch: with less memory to fill its tables, LZ4 misses more opportunities to find matches, which translates into a worse compression ratio. I've partly mitigated the effect with a more thorough search, but there is still a measurable compression ratio deficit. In any case, if compression ratio matters more to you, just increase the "HASH_LOG" value back to where it was before (17, for 512KB). And if ratio is really very important, move away from the fast scan and start implementing a more intensive search routine.

LZ4 was not created with such memory requirements in mind, but it's refreshing to see this algorithm accommodate these new conditions so easily.

Another interesting side effect of using less memory for compression is faster decompression. At first, it seems counter-intuitive, since the decompression algorithm is not affected by the change and does not need any memory beyond the input and output buffers. But a reason can be found: with fewer matches, the decompression algorithm has fewer "segments" to copy, and its speed is tied to this number: better a few large copy operations than many small ones.

Well, if you want to give it a try, just download the latest source code version from Google Code. The typical speed gain is about 20% for compression and 5% for decompression, while the ratio is typically 2% lower, although obviously your mileage will vary depending on the file to be compressed.

Update: well, another update (r11), another performance improvement. This time, it targets GCC compiler optimization and greatly benefits decompression speed.

Update 2 : a compression benchmark comparison has been completed by m^2 on his Atom-based system.

Saturday, June 4, 2011

LZ4 for Internet communications

Among the different comments and contributions received for LZ4, an interesting one is about using LZ4 in a communication scenario.

The idea is pretty simple to describe: data is compressed on the fly before being sent, and decompressed at the destination. Fair enough. LZ4 requires few resources, especially on the decoding side, so even very tiny communicating devices can accommodate such a scenario.

However, this use case comes with new threats of its own: what if the packet containing compressed data was in fact maliciously crafted in an attempt to cause a buffer overflow?

Indeed. The Internet is a dangerous place, and security comes to mind when using it. The easy answer would be "strengthen your encryption and pray that no one will break it", but that's too cheap. The main point here is to make sure that the LZ4 decoder never tries to write beyond the provided destination buffer. After all, not all malformed packets are malicious attacks; it could simply be a transmission error.

This is provided by a new decoding function, now available at Google Code:

int LZ4_uncompress(char* source, char* dest, int osize);

I've also used this opportunity to address a long-standing comment about compress and decode being too different from each other, so now it is compress / uncompress.

LZ4_decode is still available, for compatibility reasons, but flagged as "deprecated".

The main difference is the last parameter: it is osize, for "output size" (as opposed to isize in LZ4_decode). Yes, the decoder needs to know how many bytes it has to write, to ensure it never goes past the boundary.

The other difference is the result: when everything is right, LZ4_uncompress returns the number of input bytes read. On the other hand, if the compressed stream tries to write beyond the output buffer, indicating a malformed stream, the result is negative to signal an error, and its value is the byte position of the faulty instruction.

As a side-bonus, LZ4_uncompress never writes beyond the destination size (as opposed to LZ4_decode which could write up to 3 bytes beyond the limit even in normal situations).
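To show what such output checks can look like, here is a simplified sketch of a bounds-checked decoder. It is not the LZ4 source: the function name safe_uncompress_sketch is made up, the layout it decodes (token, literals, 2-byte little-endian offset, match length stored minus 4) is the LZ4 block format as I understand it, and the error code -(position + 1) is a choice made here so that an error at position 0 still yields a negative value. Like the signature above, it takes no input size, so reads from the source are not bounds-checked.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Reads a length that continues over extra bytes when the 4-bit field is 15. */
static size_t read_length(const unsigned char **ip, size_t len)
{
    if (len == 15) {
        unsigned b;
        do { b = *(*ip)++; len += b; } while (b == 255);
    }
    return len;
}

/* Returns the number of input bytes read, or a negative value
   -(position of the faulty sequence + 1) if the stream is malformed. */
int safe_uncompress_sketch(const unsigned char *src, unsigned char *dst, int osize)
{
    assert(src != NULL && dst != NULL && osize >= 0);
    const unsigned char *ip = src;
    unsigned char *op = dst;
    unsigned char *const oend = dst + osize;

    for (;;) {
        const unsigned char *seq = ip;      /* start of this sequence */
        unsigned token = *ip++;

        /* Literal run: refuse to copy past the end of the output. */
        size_t len = read_length(&ip, token >> 4);
        if (len > (size_t)(oend - op)) return -(int)(seq - src) - 1;
        memcpy(op, ip, len);
        op += len;
        ip += len;
        if (op == oend) break;              /* the last sequence has literals only */

        /* Match: the offset must point inside already written output. */
        size_t offset = (size_t)ip[0] | ((size_t)ip[1] << 8);
        ip += 2;
        len = read_length(&ip, token & 15) + 4;
        if (offset == 0 || offset > (size_t)(op - dst) || len > (size_t)(oend - op))
            return -(int)(seq - src) - 1;
        const unsigned char *match = op - offset;
        while (len--) *op++ = *match++;     /* byte-wise, since a match may overlap its output */
    }
    return (int)(ip - src);
}
```

The checks sit at the two places where a length or offset has just been decoded, so the copy loops themselves stay free of tests.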

But the really good news is this: in spite of the extra checks, decoder speed is barely affected. At about a couple of %, the cost is well within tolerance limits, and the improved safety and memory protection are really worth the change. The main reason is that the extra checks have been successfully nested, so they are not triggered in the "main loop".

Since the compressed stream format remains unchanged, it is 100% compatible with already compressed data. As a consequence, this is a recommended upgrade for LZ4 users, with LZ4_decode still available for compatibility and performance mode.

Obviously, the file compression program needs to evolve.