It's a few monthes since the initial release of xxHash. The algorithm has almost fullfilled its initial objective, which is to provide a Hash function for error detection fast enough to use within LZ4 streaming interface.

Although the "fundamentals" were fine, a few details were still rough on the edges. The most important "missing feature" was the ability to provide input data in several consecutive blocks. When hashing a large file for example, the allocated buffer might not be large enough to store the whole input within a single block.

In order to accomodate this need, a new version of xxHash has been created, which is BSD licensed.

The way it works is by dividing the job into 3 parts :

XXH32_init() creates the context structure in which intermediate results will be stored.

This structure must be passed as an argument of function XXH32_feed(), which is used to provide input in several consecutive blocks. Any number of blocks is possible, there is no limit.

When all data has been provided, it's time to retrieve the result, using XXH32_result(). This function also takes care of de-allocating the context structure.

A "single pass" function is also provided, both for simplicity (a simple function call is enough) and for performance. The latter is important if the amount of data to hash is small (<100 bytes, also called "small keys"). In this case, since there is no intermediate structure to allocate & maintain, the savings are significant.

To simplify usage and interoperability, there is now a single xxHash version, which is "strong" (meaning it successfully pass all tests from SMHasher test suite). This is possible because the new version is also faster (5.4GB/s on my Core 2 Duo, to be compared with 4.2GB for the older one). The speed difference does no longer justify a "fast" version with lessened distribution guarantee.

The framework is also more extensible, meaning that versions for 64-bits, 128-bits and 256-bits can appear in the future. But for the time being, the focus is really on the 32-bits version. It's designed to be very fast on all kind of 32-bits CPU, including embedded ones (such as ARM), with still the objective to become a companion error checker for LZ4 streaming.

Wednesday, December 12, 2012

Tuesday, July 3, 2012

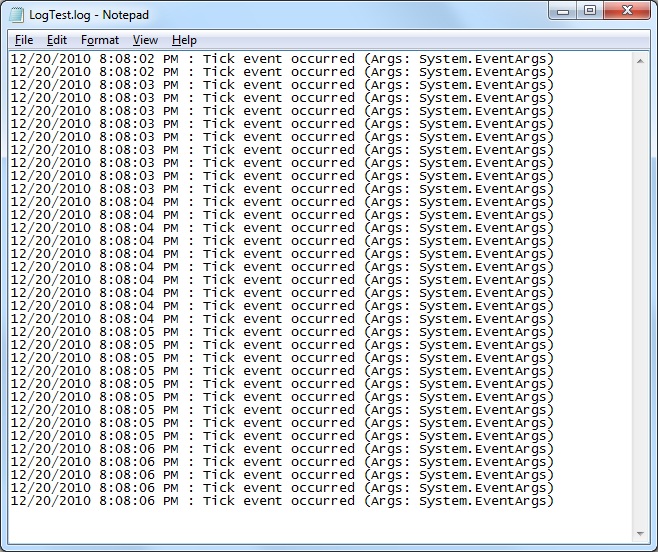

Log file compression

Although i'm currently on holliday, with limited access to the world wide web,

i would like to link here an interesting contribution from Vitor Oliveira, an LZ4 user, which seems to have found some pretty impressive results for log file compression by using a simple technique :

multi-pass compression.

More specifically, his method, which involves several pass of LZ4 algorithms, seems to produce compressed file which are several times smaller than zlib, while requiring only a fraction of the computation cost.

Some preliminary results :

zlib (one pass) : 54 MB, 265ms

LZ4 (one pass) : 56 MB, 6ms

LZ4 (multi-pass) : 4 MB, 16 ms

Since log file compression is a relatively common scenario, i figure this was interesting to share :

https://groups.google.com/d/msg/lz4c/DcN5SgFywwk/AVMOPri0O3gJ

i would like to link here an interesting contribution from Vitor Oliveira, an LZ4 user, which seems to have found some pretty impressive results for log file compression by using a simple technique :

multi-pass compression.

More specifically, his method, which involves several pass of LZ4 algorithms, seems to produce compressed file which are several times smaller than zlib, while requiring only a fraction of the computation cost.

Some preliminary results :

zlib (one pass) : 54 MB, 265ms

LZ4 (one pass) : 56 MB, 6ms

LZ4 (multi-pass) : 4 MB, 16 ms

Since log file compression is a relatively common scenario, i figure this was interesting to share :

https://groups.google.com/d/msg/lz4c/DcN5SgFywwk/AVMOPri0O3gJ

Wednesday, May 30, 2012

Compressed data transmission

If there is a situation where data is inherently short-lived, it is communication. Data starts its live on the sender side, and end it on the receiving side, a few milliseconds later.

Or does it ? Sometimes, data comes from a file into a local storage, or can be stored at the receiving side. In such case, data is merely "traveling", but is not "short-lived".

Does it make any difference ? In fact, yes, it does.

When it comes to sending a file content, this data can be "prepared" in advance. Which means it can be compressed ahead of sending it. Very strong (asymmetric) algorithms can be used for compression, as long as decoding remains "fast enough" to cope with data speed. This leads to major bandwidth reduction, and therefore improve cost and perceived transmission speed.

When it comes to sending "short-lived" data, it means this data did not exist before being produced, and the sole purpose of this data existence is to be sent, and (generally) consumed on receiving end. There is no way to "prepare" such data in advance, it must be compressed "on the fly", which means "fast".

But there is another terrible side effect : compression performance primarily comes from its capacity to "learn patterns", and re-apply them in an optimal way. Which means, for compression to be effective, a minimum of "historic data" must have already been processed for the next data to be properly compressed. With a file content, the history can be the entire file itself, which could mean a lot of megabytes, and therefore excellent perspectives for compression.

The situation is much more severe when data is generated and compressed "on the fly" : maybe the message to be sent is only a few tens of bytes long. How to compress such a thing ?

Let's study this use case.

A first "naive" implementation would simply create a message, make a packet out of it, compress it and then send it.

This implementation is unlikely to bring tangible benefits, since IP packets are typically small, trying to match MTU in order to avoid fragmentation side-effects.

A second, more compression-friendly, implementation, could try to amass enough information before starting to compress it, and then send the compressed data using as many packets as necessary.

This will certainly bring better compression performance, but introduces another problem, latency. Waiting for "enough data to be compressed" can lead to unacceptable delays.

For example, in real-time games, player command must be sent basically a.s.a.p.

As another use case, some systems may generate little data (a temperature probe for example), separated by long cycle duration.

Therefore, waiting for "enough data" is not a valid strategy in such circumstances.

A third, more complex, strategy, would use all past transmitted data as a kind of "dictionary", to help compress the next packet to come.

This basically requires the dictionary to remain "synchronized" at both end, sender and receiver. This is achievable in an "ideal" environment (no loss, no error), which is quite common in fact when using TCP transmission.

So, to sum up, we have some freshly generated data to send, of any size but typically small (a few hundreds of bytes), and we want to use all previously transmitted data as dictionary to improve compression, which requires some kind of "memory" at both sender and receiver end.

This looks possible.

In fact, this is a direct usage of "variable block sizes" concept which i expressly ruled out as "not useful" in an earlier blog note :). Now seems a good time to consider it again...

Such implementation would however require some new functions, able to re-use and save some "history data", instead of starting from freshly clean tables. This will require quite some work to achieve.

As a side effect of such methodology, it also means that such compressed packet are not compatible with stateless protocols : since they depend on previously sent data, they are inherently stateful. But so are TCP sessions anyway...

Or does it ? Sometimes, data comes from a file into a local storage, or can be stored at the receiving side. In such case, data is merely "traveling", but is not "short-lived".

Does it make any difference ? In fact, yes, it does.

When it comes to sending a file content, this data can be "prepared" in advance. Which means it can be compressed ahead of sending it. Very strong (asymmetric) algorithms can be used for compression, as long as decoding remains "fast enough" to cope with data speed. This leads to major bandwidth reduction, and therefore improve cost and perceived transmission speed.

When it comes to sending "short-lived" data, it means this data did not exist before being produced, and the sole purpose of this data existence is to be sent, and (generally) consumed on receiving end. There is no way to "prepare" such data in advance, it must be compressed "on the fly", which means "fast".

But there is another terrible side effect : compression performance primarily comes from its capacity to "learn patterns", and re-apply them in an optimal way. Which means, for compression to be effective, a minimum of "historic data" must have already been processed for the next data to be properly compressed. With a file content, the history can be the entire file itself, which could mean a lot of megabytes, and therefore excellent perspectives for compression.

The situation is much more severe when data is generated and compressed "on the fly" : maybe the message to be sent is only a few tens of bytes long. How to compress such a thing ?

Let's study this use case.

A first "naive" implementation would simply create a message, make a packet out of it, compress it and then send it.

This implementation is unlikely to bring tangible benefits, since IP packets are typically small, trying to match MTU in order to avoid fragmentation side-effects.

A second, more compression-friendly, implementation, could try to amass enough information before starting to compress it, and then send the compressed data using as many packets as necessary.

This will certainly bring better compression performance, but introduces another problem, latency. Waiting for "enough data to be compressed" can lead to unacceptable delays.

For example, in real-time games, player command must be sent basically a.s.a.p.

As another use case, some systems may generate little data (a temperature probe for example), separated by long cycle duration.

Therefore, waiting for "enough data" is not a valid strategy in such circumstances.

A third, more complex, strategy, would use all past transmitted data as a kind of "dictionary", to help compress the next packet to come.

This basically requires the dictionary to remain "synchronized" at both end, sender and receiver. This is achievable in an "ideal" environment (no loss, no error), which is quite common in fact when using TCP transmission.

So, to sum up, we have some freshly generated data to send, of any size but typically small (a few hundreds of bytes), and we want to use all previously transmitted data as dictionary to improve compression, which requires some kind of "memory" at both sender and receiver end.

This looks possible.

In fact, this is a direct usage of "variable block sizes" concept which i expressly ruled out as "not useful" in an earlier blog note :). Now seems a good time to consider it again...

Such implementation would however require some new functions, able to re-use and save some "history data", instead of starting from freshly clean tables. This will require quite some work to achieve.

As a side effect of such methodology, it also means that such compressed packet are not compatible with stateless protocols : since they depend on previously sent data, they are inherently stateful. But so are TCP sessions anyway...

Monday, May 28, 2012

Members properties

After spending some time on expected properties at streaming level, let's now get to the core of the objective, regarding the compressed data parameters.

As stated previously, a compressed stream consists of several members, the most important ones being compressed data sets. Each member starts with a header, in order to identify its content. And each header starts with a magic number, a kind of 'ID tag'.

We'll focus here on "LZ4 compressed data set". The stream design above allows adding any future compression algorithm at a later stage.

And let's take as an example the old legacy framing format, defined into lz4demo.

1) There is a magic number, which is 0x184C2102,in little endian format.

2) There are no explicit parameters. In fact, all parameters are implicit.

They are :

- The compressed data set is cut into blocks of 8MB

- Each block starts with a field giving its size (therefore, the compressed size)

- Blocks are independent

- The original data size is not stored. It will be known on decoding completion

- There is no checksum

Well, even with such limitations, the format nonetheless works perfectly fine. It's just a little too restricted to become a "generic format", and therefore, the objective of the specification is to provide more room for parameters selections.

We have already established in previous blog posts that allowing checksum for Error detection is an important selectable feature.

Another important one is the ability to select block size, since they directly control the amount of memory buffers necessary at decoding side.

Let's now study and establish potential needs for a few other properties :

- Source data size

The original size of source data is not an absolute necessity : it's always possible to decode without it, as long as buffer sizes are properly described.

But it is nonetheless useful. For example, thanks to this information, the number of blocks within the current member can be calculated beforehand. Moreover the amount of data to decode from the last block is known.

Or, if there is a single block, the exact amount of memory can be allocated, instead of the block maximum size.

It is also useful to display the processing position (yep, we decoded 80MB, but does that represent 10% or 90% of the stream to decode ?)

However, there are also circumstances in which this data is not known. For example, if the input was piped to the compressing process, then the size will be known only on hitting its end. This might be too late to "retrofit" the output.

Another situation is when several compressed data sets are appended into a single stream : then the "source data size" field only applies to the current data set, but the total size is not known.

Therefore, since it is useful but not compulsory, this information shall be present, but as an option only.

- Uncompressed blocks

A simple but important feature, since it avoids the bandwidth overhead and CPU consumption of the compression format when it's useless.

This could be done very cheaply, by accepting that, if the size of the compressed block is the same as the defined one, then it's necessarily uncompressed.

This suggestion looks simple enough for most blocks, except for the last one, which size is unknown (but capped).

Therefore, it would be necessary to know the size of the last block to compare it to the compressed one, and therefore determine if the block is compressed or not.

Another idea would be : let's give up this complexity, the last block is always compressed, even if compression is either useless or detrimental.

Actually, it's not a good idea to "not handle the last block", since there is a disastrous corner case : supposed that the compressed size of the last block is exactly the size of an uncompressed full block : then the decoding will assume it's uncompressed, leading to data corruption.

This corner case can be avoided by enforcing a simple rule : a compressed block is necessary smaller than original size. Therefore, as the last block has a size <= block size, its compressed size is necessarily < block size. Hence, if the size of this last block is the maximum size, then we are in the specific but valid corner case where the last block size is exactly the maximum size of a block, and is not compressible.

OK, enough of corner cases, let's now be in the normal situation where the last block size is a fraction of the maximum block size. How could we know it is uncompressed ?

This problem could be mitigated by inserting an information to know that we are dealing with the last block. For example, knowing the original size of the source data is enough for this need.

But it's not always available. As said previously, this is just an option, since in some streaming mode, this information is unknown. Therefore we need another indicator.

It could be something as simple as a bit, which simply tells that there is another block to follow, and as a consequence, the current block is full sized. As a bonus, this mechanism also protects against "silent block truncation" (when the compressed stream is cut exactly at the border between 2 blocks).

On reaching the last block, we need another piece of information, either the uncompressed size of the block, or if the block is compressed. The latter seems more compact.

- Zero-filled blocks

This idea was actually proposed by Charles Bloom : it's not rare, for a section of input data, to be filled with zeros.

The idea would be to "mark" such blocks with a specific prefix, such as "0".

For such situation to have reasonable chances to happen, the block size must be small enough. For example, this will probably almost never happen with lz4demo block size (8MB), while this is going to be much more frequent with very small blocks, such as 4KB ones.

- Error correction

While error detection has been much talked about, nothing has been said up to now about error correction.

That's both because this feature is much more complex to provide and of questionable usefulness.

Error correction is mostly useful in situations when there is no way to "resend" erroneous data. This applies to real-time codec (such as voice or video) and stored archive.

The difficulty in both cases is that erroneous data tends to be "bursty". For example, when a storage sector fails, we don't lose just a few bytes, but an entire sector size, typically 4KB. Same for transmission, where the most common error is a missing packet.

Dealing with large burst of errors requires some specific techniques, which unfortunately cost much processing power and memory. As a consequence, the CPU and memory budget for error correction is way beyond LZ4 one, which makes the association a questionable choice.

Therefore, it seems this feature is not expected to be "generic enough" to reserve a place into the generic framing format specification. Obviously, forking is always possible, and even recommended, to support specific features.

- Allow multi-threaded compression and decompression

Multi-threaded compression is easily achievable thanks to the division of input data into "blocks".

Multi-threaded decoding is also possible if those blocks are "independent".

Both mode shall be possible, and selectable

- Variable block sizes

This one is tricky : up to now, we have been talking about "fixed size" blocks only, with only the last block of a compressed data set having an unknown (but capped) size.

The idea here would be to authorize blocks of arbitrary size, instead of fixed ones.

The benefits are two-fold : - Separate data on "natural boundaries", in order to improve compression ratio and speed

- Allow data insertion of any size

The first point is simple to argue with : such benefit only occurs with very-high ratio (and slow) compression algorithms, such as CM, which "learn" the data behavior through statistics. There is no tangible benefit in trying to do the same for LZ4.

The second benefit is more interesting, since it authorizes some flexibility in archive management.

Actually, this is mitigated by the possibility to concatenate compressed data sets (or "members") together in a single stream or file.

Inserting data could therefore be realized by cutting the initial member into 2 parts, inserting the new member, and concatenating the 3 members together.

As a consequence, it seems the format already supports such scenario, without needing variable block sizes.

- Partial extraction and Quick Jump Table

Another potential idea is that, within a member, one could need to only extract a specific portion.

It's always possible to decode the full data set and get to the required location, but sometimes this might be overkill. For example, one may need a few MB of data which happen to be a few GB away from the starting point.

However, the idea to decode just the necessary part introduces a few restrictions : - First, the input media should be seekable. It makes little sense to partially decode a piped streams, since the decoding process is likely faster than the pipe itself.

- Second, the compressed data shall be cut into independent blocks. Otherwise, it would be necessary to decode, and therefore read, all previous blocks

- Third, to avoid to decode "too much data", the blocks shall be small enough, with corresponding impact on compression ratio (the smaller the block, the lower the compression ratio).

- Fourth, since the i/o process is likely slower than LZ4 decoding, there is a benefit only if it is possible to quick-jump to the right location immediately.

This can be achieved thanks to a table at the beginning of the compressed file. Such a table can only be filled after compression, and therefore is incompatible with non-seekable output. - Fifth, such "table" mechanism at member level would be useless in members appending scenarios.

These are quite many restrictions, for the benefit of a hardly-requested feature.

So probably this capability shall be left to a dedicated framing format.

Moreover, should the input stream be seekable, it's still possible to "hop" over blocks without reading/decoding them. This is still slower than a direct jump, but still a sensible potential speed improvement.

- Error detection algorithm

As a quick follow up of selecting-checksum-algorithm, one could note that i had not specified a preferred checksum algorithm, only a preferred checksum size (32-bits).

Although at this stage i'm somewhat inclined to use xxhash-strong, due to its speed and very good distribution property, there is still a chance that the algorithm might be found unsuitable at a later stage. Therefore, some provision should be left to allow another algorithm to take over later on if need be.

Pushing the idea a bit further, one could think "let the user select its own checksum algorithm". While the idea may sound nice, it goes against the principle of interoperability, which is exactly what this framing format tries to achieve. Therefore, only clearly defined checksum algorithms shall be allowed.

I believe this post went through most foreseeable requirements for the LZ4 framing format.

So now seems a reasonable time to start a header specification.

So now seems a reasonable time to start a header specification.

Friday, May 25, 2012

Useful compressed streaming properties

The previous articles were primarily targeted at error detection and memory buffer management, which are, arguably, very important features. We'll continue digging into the properties which seem necessary to build a suitably universal compressed streaming format.

The next article will look more closely at "members" properties.

- Compressed data appending

There are some use cases in which newly created compressed data is simply appended to an existing file or stream. Some time later, the file will be decoded and/or filtered, searching for data of potential interest.

The decoder must not choke with such input. It shall simply continue the decoding, dealing with each compressed data one after another.

This feature is relatively simple to support : it is merely necessary to not assume that, after the current compressed stream, an EOF marker will necessarily happen.

The change is simple, but it also means that a single stream may host several compressed data sets. This situation is reminiscent of the well specified and documented gzip RFC 1952 :

"A gzip file consists of a series of "members" (compressed data sets)."

This is a good fit for this situation, so we'll use the same naming convention. - Authorized members

A typical compressed stream will have a single member.

The member will, necessarily, start with a header, which allows its screening as a "valid" or "invalid" input.

A simple way to achieve this selection is to start with a "magic number", which then is compared to a list. If it's not in the list, input is rejected as "invalid".

The "magic number" is enough to tell what kind of decoder is necessary, and which header parameters follow.

To ensure proper screening from invalid (noisy) input, the magic number shall be large enough for most of its values to be considered "invalid", thus reducing the perspective of "false positive" to negligible levels.

There is however a specific category that could be defined before-hand : skippable members.

Skippable members do not contain compressed data. Instead, they may, for example, contain user comments, or a description of the compressed content (for example, file properties), or an electronic signature, it is even possible to have a program suitable for a certain environment, well, whatever.

The point is that in some circumstances, these members are not strictly necessary, and may be skipped entirely to retrieve just the compressed content.

Skipping such member requires, obviously, to know its size.

Therefore, the specification shall allow : - A range of authorized "magic number values", known as skippable members

- A mandatory "member size" field

- Beyond that, any data in the member is "user-specified"

Any magic number which fall outside of the list of authorized member is considered "invalid", and stop the decoding process, flagging the input as "corrupted".

- Clear endian convention

Some CPU read data using Little Endian convention, others use Big Endian convention.

Other conventions exist too (such as PDP endian), but it's fair to say they are rare.

Anyway, the point is that, to ensure all these platforms can exchange data between themselves, a single convention shall be selected and enforced

In my view, Little Endian is the most common convention these days, with x86/x64 on one side and ARM on the other side. Together, they almost "own" the CPU market, TV game console being the main noticeable market outside of their reach.

- Compatibility with non-seekable streams

Being compatible with "streaming mode" explicitly excludes dependency on "seekable" property.

This use case targets pipes, in either input or output mode, or both.

Piped input data is provided "on the fly" by a generating process, such as tar for example. There is no way to "know its size". It will be discovered on hitting the end of the stream.

Piped output data is immediately consumed by a user process. There is no way to "come back" to an earlier position and correct or add an information there : every new information must necessarily be appended.

When both input and output are piped, it creates a set of restrictions.

Hopefully, it is not so difficult to overcome.

First, a stream doesn't work "byte by byte". It is in fact a succession of memory buffers. One of them is the compression buffer.

Knowing the size of input source data is useful in one case : when its smaller than the default block size. This is fine, since in this case, all input can be "loaded" into the compression buffer, and then compressed. Since we then write the result to the output buffer, it's possible to write there the size of input. During decoding, this size will allow allocation of just the necessary amount of memory, instead of the full block-size.

This is a useful but small benefit : we could have allocated the buffer at its maximum "block" size instead.

When input is larger than a single block (=buffer size), we have a problem, and we can't "write" the size of the input, since we don't know it yet.

Well, it's not that much of a problem. LZ4 doesn't "need" this size to decode. And the buffer is already being allocated to its maximum block size anyway.

In other words, knowing the uncompressed source size is not mandatory. We can live without.

- Enforce data alignment

LZ4 does not require any data alignment, so it does not look necessary.

The next article will look more closely at "members" properties.

Tuesday, May 15, 2012

Dealing with blocks side-effects

Continuing on the previous post analysis of the lz4demo's current framing format, another side-effect created by this simple format is "latency".

Since the fixed block size is 8MB, the codec must wait for the first block to be completely filled before starting any decoding or compressing operation. It effectively defers processing by a few microseconds.

This issue may not seem large, especially if underlying I/O is fast. Nonetheless, not all I/O are fast, and even in such cases, an 8MB "starting time" is bound to be measurably worse than a 256KB one for example.

As a consequence, a framing format with a smaller block size would offer better and smoother processing throughput.

Which leads us to a last and important issue : independent blocks.

While this strategy is good for simplicity and multi-threading, it's bad for compression : it translates into a worsened compression ratio on the first 64KB of each block.

With block sizes of 8MB, this effect is negligible (affecting compression ratio by less than 0.1%). However, the smaller the block size, the worse the side-effect. With small block sizes, this effect can no longer be neglected.

Therefore, should the blocks remain independent ?

Indeed. By making the next block depending on the previous one, it nullifies the problem of worsened compression ratio. But it also makes it impossible to decode a compressed block independently, with negative consequences on multi-threading and partial decoding capabilities.

Is that really an issue ? Well, it really depends on the use case.

In many circumstances, such as simple file compression or stream compression, it does not matter, since data is encoded and decoded sequentially anyway. Throwing away the capability to multi-thread decompression seems bad, but in fact, most of the time, I/O is unable to cope with LZ4 decoding speed (around 1GB/s). So a single decoding thread is enough to handle almost any I/O load.

Since there is little need for partial decoding, nor for multithreaded decoding, the compression ratio gain looks more useful.

There is just a little remaining problem :

While the decoding function will need few adaptation to handle this new use case, most of the complexity being located into the buffer manager, the compression function on the other hand has to be adapted.

While each block were independant, compression could start with a pristine clean reference table.

But with sequentially dependant blocks, the initialization becomes more complex : the previous 64K needs to be copied in front of the next block, and then loaded/hashed into the reference table, before starting compression. It obviously costs CPU and time.

A variant is to just "translate" the references already loaded into the table as a result of compressing the previous block, but it's limited to "single thread" scenario.

OK, but now that we can reference data from previous blocks, how far should we go ? The natural maximum distance is the "copy window size". This size is, by default, 64KB for LZ4. But it could happen that the receiving end of the compressed stream has not enough memory to store that much data. In such case, the compressor must be careful in not using references beyond the memory capacity of the receiver. In other words, it must deliberately discard long-distance copy operations.

Should such a use case be part of the generic framing format or not ?

My answer would be : it's okay, as long as an easy solution can be found.

How could that work ? Once again, let's focus on the decoder side.

I'll imagine a controller with only 4K memory available as buffer.

A simple way to handle such case is by dividing this space into 2 parts : 2K for the "previous block", and 2K for the "block to decode". So we end up with :

- Block size = 2K = memory limit / 2

- Maximum copy reference = lower limit of previous block

Obviously, there are other possibilities, such as cutting data into even small parts (for example 1K blocks) and having a back reference of up to 3 previous blocks. But as a first approximation, it seems these variant will provide almost equivalent results while being more complex.

This situation can be summarized with a simple rule : never reference data beyond one block distance.

With only this simple rule in place, it seems the default LZ4 framing format could be made compatible even with environments with severely limited RAM, provided the encoder selects a suitable block size.

Since the fixed block size is 8MB, the codec must wait for the first block to be completely filled before starting any decoding or compressing operation. It effectively defers processing by a few microseconds.

This issue may not seem large, especially if underlying I/O is fast. Nonetheless, not all I/O are fast, and even in such cases, an 8MB "starting time" is bound to be measurably worse than a 256KB one for example.

As a consequence, a framing format with a smaller block size would offer better and smoother processing throughput.

Which leads us to a last and important issue : independent blocks.

While this strategy is good for simplicity and multi-threading, it's bad for compression : it translates into a worsened compression ratio on the first 64KB of each block.

With block sizes of 8MB, this effect is negligible (affecting compression ratio by less than 0.1%). However, the smaller the block size, the worse the side-effect. With small block sizes, this effect can no longer be neglected.

Therefore, should the blocks remain independent ?

Indeed. By making the next block depending on the previous one, it nullifies the problem of worsened compression ratio. But it also makes it impossible to decode a compressed block independently, with negative consequences on multi-threading and partial decoding capabilities.

Is that really an issue ? Well, it really depends on the use case.

In many circumstances, such as simple file compression or stream compression, it does not matter, since data is encoded and decoded sequentially anyway. Throwing away the capability to multi-thread decompression seems bad, but in fact, most of the time, I/O is unable to cope with LZ4 decoding speed (around 1GB/s). So a single decoding thread is enough to handle almost any I/O load.

Since there is little need for partial decoding, nor for multithreaded decoding, the compression ratio gain looks more useful.

There is just a little remaining problem :

While the decoding function will need few adaptation to handle this new use case, most of the complexity being located into the buffer manager, the compression function on the other hand has to be adapted.

While each block were independant, compression could start with a pristine clean reference table.

But with sequentially dependant blocks, the initialization becomes more complex : the previous 64K needs to be copied in front of the next block, and then loaded/hashed into the reference table, before starting compression. It obviously costs CPU and time.

A variant is to just "translate" the references already loaded into the table as a result of compressing the previous block, but it's limited to "single thread" scenario.

OK, but now that we can reference data from previous blocks, how far should we go ? The natural maximum distance is the "copy window size". This size is, by default, 64KB for LZ4. But it could happen that the receiving end of the compressed stream has not enough memory to store that much data. In such case, the compressor must be careful in not using references beyond the memory capacity of the receiver. In other words, it must deliberately discard long-distance copy operations.

Should such a use case be part of the generic framing format or not ?

My answer would be : it's okay, as long as an easy solution can be found.

How could that work ? Once again, let's focus on the decoder side.

I'll imagine a controller with only 4K memory available as buffer.

A simple way to handle such case is by dividing this space into 2 parts : 2K for the "previous block", and 2K for the "block to decode". So we end up with :

- Block size = 2K = memory limit / 2

- Maximum copy reference = lower limit of previous block

Obviously, there are other possibilities, such as cutting data into even small parts (for example 1K blocks) and having a back reference of up to 3 previous blocks. But as a first approximation, it seems these variant will provide almost equivalent results while being more complex.

This situation can be summarized with a simple rule : never reference data beyond one block distance.

With only this simple rule in place, it seems the default LZ4 framing format could be made compatible even with environments with severely limited RAM, provided the encoder selects a suitable block size.

Monday, May 14, 2012

Memory buffer management

One of the objectives for the LZ4 framing format is to instruct the LZ4 decoder how to best handle memory to decode the compressed data.

To illustrate with a simple example, let's study the current framing format of lz4demo.

This framing has no option, and is therefore completely static. At the core of the specification is a "data split" methodology, which automatically split input data (typically files) into multiple blocks of 8MB each. That is to say, if the source data is larger than 8MB, then the first block will only hold the first 8MB of data, and the next block too, up to the point that only one block remains. This last one can have any size between 0 and 8 MB. The last block can also be the first one, if source data is < 8MB.

Moreover, each block is completely independant. Which means each block can be compressed/decompressed in any order, without dependency, except to output data blocks in the same order as input ones. This property is very interesting for multithreading.

Now, this simple scheme comes also with some problems of its own. Let's look into it.

First, since we don't know the size of the source data, the decoder has to allocate 8MB of memory, no matter what. Maybe the file is only 4KB for example ? Well, the decoder don't know it.

To be fair, knowing the maximum compression ratio (255:1), it could guess that, if the compressed size is 2KB, then the decoded size cannot be more than 512KB. But this is awkward and awful trick.

For modern computers, it seems this is a minor issue. However, in many other circumstances, such as embedded and low-power devices, this amount of memory is way too large.

To limit the size of allocated memory, a potential work-around for the decoder is to use a "sliding buffer" methodology.

For example, data would be decoded into blocks of size 256KB, a lot smaller than the full 8MB size. On reaching the end of the small block, decoded data would be written to disk, keeping only the last 64KB ones (copy window size) for reference of the next small block.

This is workable, and would save a lot of memory. But there are a few drawbacks :

As a consequence, it seems clear that there is no such thing as "one-size fits all". The size of blocks needs to be customizable, in order to fit a broader range of situations.

Additionnally, it seems better to help the decoder allocating only what's needed, especially, when it can be smaller than the default block size. Which means that, for small data source (smaller than the block size), it would be interesting to provide the original source size : this will allow the decoder to only allocate what's necessary, instead of the default maximum block size.

To illustrate with a simple example, let's study the current framing format of lz4demo.

This framing has no option, and is therefore completely static. At the core of the specification is a "data split" methodology, which automatically split input data (typically files) into multiple blocks of 8MB each. That is to say, if the source data is larger than 8MB, then the first block will only hold the first 8MB of data, and the next block too, up to the point that only one block remains. This last one can have any size between 0 and 8 MB. The last block can also be the first one, if source data is < 8MB.

Moreover, each block is completely independant. Which means each block can be compressed/decompressed in any order, without dependency, except to output data blocks in the same order as input ones. This property is very interesting for multithreading.

Now, this simple scheme comes also with some problems of its own. Let's look into it.

First, since we don't know the size of the source data, the decoder has to allocate 8MB of memory, no matter what. Maybe the file is only 4KB for example ? Well, the decoder don't know it.

To be fair, knowing the maximum compression ratio (255:1), it could guess that, if the compressed size is 2KB, then the decoded size cannot be more than 512KB. But this is awkward and awful trick.

For modern computers, it seems this is a minor issue. However, in many other circumstances, such as embedded and low-power devices, this amount of memory is way too large.

To limit the size of allocated memory, a potential work-around for the decoder is to use a "sliding buffer" methodology.

For example, data would be decoded into blocks of size 256KB, a lot smaller than the full 8MB size. On reaching the end of the small block, decoded data would be written to disk, keeping only the last 64KB ones (copy window size) for reference of the next small block.

This is workable, and would save a lot of memory. But there are a few drawbacks :

- A specific decoding function is necessary to do this, which does not exist (yet).

Such function needs to keep track of its "internal state", in order to resume decoding where it left.

Which means, for example, that we may be in the middle of match copy operation.

The "exit" and "error" conditions are also different and more numerous.

As a consequence, the code for such function will be more complex and slower. - Adding to the performance issue, the "sliding" mechanism involves repeateadly copying the last 64KB of each small block in preparation for the next one.

- Although 256KB is a lot smaller than 8MB, it's still too large for some low-memory applications.

Alas there is a limit to this methodology, which is the size of the "window copy operation" (64KB) plus a reasonable amount of data to decode, typically another 64KB.

So, the minimum decoding buffer for this methodology is 128KB.

This is better, but still too large.

As a consequence, it seems clear that there is no such thing as "one-size fits all". The size of blocks needs to be customizable, in order to fit a broader range of situations.

Additionnally, it seems better to help the decoder allocating only what's needed, especially, when it can be smaller than the default block size. Which means that, for small data source (smaller than the block size), it would be interesting to provide the original source size : this will allow the decoder to only allocate what's necessary, instead of the default maximum block size.

Monday, April 30, 2012

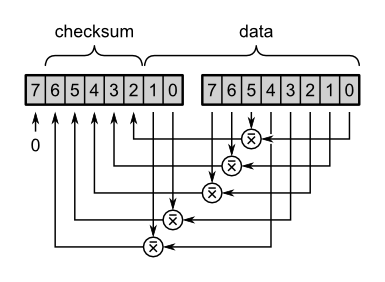

Selecting a Checksum algorithm

Following the entry regarding Error detection, it is still necessary to find a correct algorithm to do the job.

In other circumstances, the solution would have been a simple reliance on CRC32. After all, this algorithm is already in use in many applications, including modem transmission (ITU-T V42), Zip, MPEG2, etc. So it looks solid.

And solid it is, but fast it is not.

As stated by Shelwien, a fast hard-wired version of CRC32 exists, within the most recent Intel CPUs. However, not only is it a limited choice in term of target CPU, it is also a different variant (i.e. incompatible) from the normalized one. Therefore, software mode cannot be avoided.

And what's the use of a very fast compression algorithm if the checksum takes much longer to be calculated ?

To be more specific, LZ4 decoder works roughly at 1GB/s on my test machine. So, to be almost negligible, a checksum algorithm would need to be ~10x faster, which would give 10GB/s. This is obviously not possible, keeping in mind that the RAM speed doesn't cope with such situation : a memcpy() operation is limited to 2.5GB/s on the very same machine.

So OK, we can't reach the ideal situation, but what would be the best possible one, i.e. the fastest one ?

This is not an easy question, since making a fast but weak checksum with very poor properties is the usual outcome. For example, sum all your bytes one by one, and here you have your checksum ! Except that its distribution is absolutely horrible, resulting in too many potentially undetected errors.

In order to assess if a checksum algorithm features some good properties, it is necessary to test them, with an appropriate tool.

And we are lucky, such a tool exists.

Austin Appleby created MurmurHash, in a bid to provide the fastest possible hash algorithm. In order to test its own algorithm, he also created SMHasher, which is an invaluable tool to assess the performance of any hash function. As can be guessed, MurmurHash answer perfectly to all criteria, but that doesn't mean that the tool is flawed, it's more a testament that MurmurHash was developed and improved along the lines of SMHasher. That being said, the methodology is sound, and results look reliable.

I immediately used the opportunity to test different checksum algorithm provided within the package. Starting with CRC32 of course. And the results are not excellent.

CRC32 speed is 430MB/s on the test system. That's not bad, but compared to LZ4 decoder, it is more than twice slower, which means the CPU would take more time to check the data rather than actually decoding it.

Furthermore, the distribution properties of CRC32 are not "best in class". Quite far from it in fact. The bit distribution is typically "biased", which means that the probably for a bit position to be 0 or 1 is not a near-perfect ~50%. Worse, bit independence is nonexistent. It fails some tests, such as the "2-bytes keyset", and the "HighBits keyset", which has devastating consequences on bit distribution.

This doesn't make CRC32 unusable. It just shows that this older algorithm is not perfect.

More importantly, as expected, it is not fast enough for our needs.

Let's move on to another candidate, FNV, which is also part of the package.

FNV (Fowler-Noll-Vo) takes a reputation of being an extremely fast hash function. Many variants have appear around the web, with the same core principles, marrying XOR with multiplications in an inner loop.

The speed is indeed better, but at 550 MB/s, it's not that great.

Furthermore, the properties of FNV are no better than CRC32, featuring the same weaknesses, plus failing badly at "Cyclic test", having poor results on "sparse keyset", and very poor on both low and high bits keyset, etc. Well, the results are so bad, i could not advise this function to anyone.

So, let's go straight to the king of the hill. The most recent version of MurmurHash (v3.a) is said to be the fastest hash function available. We are only interested in the 32-bits version, so let's put that one on the grill.

And yes, fast it is. The speed is 2.7 GB/s. This is just huge. It's close enough to my RAM speed limit. Interestingly, the speed is depending on input being aligned on 4-bytes boundaries (32 bits). If not, the speed dips to 2.0 GB/s, which is still good.

More importantly, with this speed, we get all SMHasher properties verified, with no single trace of distribution weakness. That's great. We have a candidate !

************************************************************************************

The story could have ended here. But as usual, i couldn't resist. I would be willing to put some of my own function to the test, thanks to SMHasher.

An old "fast hash" of mine would end up with the same speed as MurmurHash, but much worse properties.

That could have been the end. After all, MurmurHash is so fast, maybe it is merely limited by RAM speed after all ?

So i made another, simpler, attempt purely targeting speed. It resulted in an 8GB/s hash function. Yep. This is much more than my RAM speed, so how could it be ?

Well, in fact, it is much more than memcpy(), but there's nothing magic : in fact, memcpy() not only read input from memory, but also write to memory. This is something the hash function does not need to do. The input is simply "sunk" into registers. So, it can be that fast, and there is definitely some room left for more speed.

I therefore spent a little more time studying what makes MurmurHash such a great hash function.

I believe there are 2 keys parts :

The first one "blends" the fed input bytes into registers, thanks to several simple operations, the most important of them being _rotl().

_rotl() (Rotate left) ensures that the bits that were previously high now becomes low. So it effectively nullify the problem of high bits having a small contribution to the overall hash distribution.

The second one digest the final blended result thanks to a serie of small steps known as "avalanche". It just destroys any kind of bit bias or correlation left that the blend would have left.

With both being combined, we get an almost perfect hash distribution.

So i started from there, re-using the above "fast simple hash" and improving it with the presented ideas. I ended up with a 7.5 GB/s hash function, which is about just Hellish.

It would pass all the tests except for a single one, "Keyset 'Sparse' - 2048-bit keys with up to 2 bits set". That's because the blending phase creates some "twin bits" far apart from each other, at varying distances depending on bit position. This is obviously bad for a crypto-hash, but a 32-bits checksum is anyway not meant for this task.

We simply want to detect errors, more probably transmission and storage failures, or manual glitches. For such cases, since twin bits are very far apart, it seems it would be extremely unlucky to have random errors happening just exactly on twin bits pattern. In fact, the probability seems lower than getting a collision from two completely different inputs on the 32-bits checksum. Which is the limit of this exercise.

Anyway, for the sake of it, i also created a stronger version of the algorithm, which add an operation in the inner loop to make the twin bit pattern vanish. This version reaches 4.2 GB/s, which is still good, but noticeably slower than the fast version. Well, that's the price to pay for stronger properties.

The final result of these investigation is called xxHash, and is now an open-sourced project, hosted on Google Code.

*****************************************************

(Update) : a new xxHash version has been published. It features the same diversity as xxHash_strong, while being faster, at 5.4 GB/s on the same platform (Core 2 Duo, 3 GHz). It also allows providing input in several consecutive calls, as opposed to a single memory block.

In other circumstances, the solution would have been a simple reliance on CRC32. After all, this algorithm is already in use in many applications, including modem transmission (ITU-T V42), Zip, MPEG2, etc. So it looks solid.

And solid it is, but fast it is not.

As stated by Shelwien, a fast hard-wired version of CRC32 exists, within the most recent Intel CPUs. However, not only is it a limited choice in term of target CPU, it is also a different variant (i.e. incompatible) from the normalized one. Therefore, software mode cannot be avoided.

And what's the use of a very fast compression algorithm if the checksum takes much longer to be calculated ?

To be more specific, LZ4 decoder works roughly at 1GB/s on my test machine. So, to be almost negligible, a checksum algorithm would need to be ~10x faster, which would give 10GB/s. This is obviously not possible, keeping in mind that the RAM speed doesn't cope with such situation : a memcpy() operation is limited to 2.5GB/s on the very same machine.

So OK, we can't reach the ideal situation, but what would be the best possible one, i.e. the fastest one ?

This is not an easy question, since making a fast but weak checksum with very poor properties is the usual outcome. For example, sum all your bytes one by one, and here you have your checksum ! Except that its distribution is absolutely horrible, resulting in too many potentially undetected errors.

In order to assess if a checksum algorithm features some good properties, it is necessary to test them, with an appropriate tool.

And we are lucky, such a tool exists.

Austin Appleby created MurmurHash, in a bid to provide the fastest possible hash algorithm. In order to test its own algorithm, he also created SMHasher, which is an invaluable tool to assess the performance of any hash function. As can be guessed, MurmurHash answer perfectly to all criteria, but that doesn't mean that the tool is flawed, it's more a testament that MurmurHash was developed and improved along the lines of SMHasher. That being said, the methodology is sound, and results look reliable.

I immediately used the opportunity to test different checksum algorithm provided within the package. Starting with CRC32 of course. And the results are not excellent.

CRC32 speed is 430MB/s on the test system. That's not bad, but compared to LZ4 decoder, it is more than twice slower, which means the CPU would take more time to check the data rather than actually decoding it.

Furthermore, the distribution properties of CRC32 are not "best in class". Quite far from it in fact. The bit distribution is typically "biased", which means that the probably for a bit position to be 0 or 1 is not a near-perfect ~50%. Worse, bit independence is nonexistent. It fails some tests, such as the "2-bytes keyset", and the "HighBits keyset", which has devastating consequences on bit distribution.

This doesn't make CRC32 unusable. It just shows that this older algorithm is not perfect.

More importantly, as expected, it is not fast enough for our needs.

Let's move on to another candidate, FNV, which is also part of the package.

FNV (Fowler-Noll-Vo) takes a reputation of being an extremely fast hash function. Many variants have appear around the web, with the same core principles, marrying XOR with multiplications in an inner loop.

The speed is indeed better, but at 550 MB/s, it's not that great.

Furthermore, the properties of FNV are no better than CRC32, featuring the same weaknesses, plus failing badly at "Cyclic test", having poor results on "sparse keyset", and very poor on both low and high bits keyset, etc. Well, the results are so bad, i could not advise this function to anyone.

So, let's go straight to the king of the hill. The most recent version of MurmurHash (v3.a) is said to be the fastest hash function available. We are only interested in the 32-bits version, so let's put that one on the grill.

And yes, fast it is. The speed is 2.7 GB/s. This is just huge. It's close enough to my RAM speed limit. Interestingly, the speed is depending on input being aligned on 4-bytes boundaries (32 bits). If not, the speed dips to 2.0 GB/s, which is still good.

More importantly, with this speed, we get all SMHasher properties verified, with no single trace of distribution weakness. That's great. We have a candidate !

************************************************************************************

The story could have ended here. But as usual, i couldn't resist. I would be willing to put some of my own function to the test, thanks to SMHasher.

An old "fast hash" of mine would end up with the same speed as MurmurHash, but much worse properties.

That could have been the end. After all, MurmurHash is so fast, maybe it is merely limited by RAM speed after all ?

So i made another, simpler, attempt purely targeting speed. It resulted in an 8GB/s hash function. Yep. This is much more than my RAM speed, so how could it be ?

Well, in fact, it is much more than memcpy(), but there's nothing magic : in fact, memcpy() not only read input from memory, but also write to memory. This is something the hash function does not need to do. The input is simply "sunk" into registers. So, it can be that fast, and there is definitely some room left for more speed.

I therefore spent a little more time studying what makes MurmurHash such a great hash function.

I believe there are 2 keys parts :

The first one "blends" the fed input bytes into registers, thanks to several simple operations, the most important of them being _rotl().

_rotl() (Rotate left) ensures that the bits that were previously high now becomes low. So it effectively nullify the problem of high bits having a small contribution to the overall hash distribution.

The second one digest the final blended result thanks to a serie of small steps known as "avalanche". It just destroys any kind of bit bias or correlation left that the blend would have left.

With both being combined, we get an almost perfect hash distribution.

So i started from there, re-using the above "fast simple hash" and improving it with the presented ideas. I ended up with a 7.5 GB/s hash function, which is about just Hellish.

It would pass all the tests except for a single one, "Keyset 'Sparse' - 2048-bit keys with up to 2 bits set". That's because the blending phase creates some "twin bits" far apart from each other, at varying distances depending on bit position. This is obviously bad for a crypto-hash, but a 32-bits checksum is anyway not meant for this task.

We simply want to detect errors, more probably transmission and storage failures, or manual glitches. For such cases, since twin bits are very far apart, it seems it would be extremely unlucky to have random errors happening just exactly on twin bits pattern. In fact, the probability seems lower than getting a collision from two completely different inputs on the 32-bits checksum. Which is the limit of this exercise.

Anyway, for the sake of it, i also created a stronger version of the algorithm, which add an operation in the inner loop to make the twin bit pattern vanish. This version reaches 4.2 GB/s, which is still good, but noticeably slower than the fast version. Well, that's the price to pay for stronger properties.

The final result of these investigation is called xxHash, and is now an open-sourced project, hosted on Google Code.

*****************************************************

(Update) : a new xxHash version has been published. It features the same diversity as xxHash_strong, while being faster, at 5.4 GB/s on the same platform (Core 2 Duo, 3 GHz). It also allows providing input in several consecutive calls, as opposed to a single memory block.

Thursday, April 12, 2012

Detecting errors

Surely there are also valid usages of LZ4 which may not need error detection and its associated CPU and bandwidth overhead, hence this feature will remain optional in the final specification, but nonetheless recommended.

This requirement is really very common. And a reasonable method to check for unintentional errors (such as transmission or storage ones) can be provided with a simple checksum. Typically, a good 32-bits checksum will make it relatively improbable (less than 1 in a billionth) that random changes stay unnoticed. This method is typically used by Zip.

One could note that the LZ4 decoder already includes some form of detection for malformed input. It's correct, but it's not enough, because it only checks that provided instructions are valid. The main objective was to forbid any attempt to read or write outside of the memory buffers. Hence, it cannot detect a corrupted literal within the compressed stream for example.

On the other side, one could also require the method to guarantee the input data never was modified, neither randomly nor intentionally (data integrity). Such objective, however, seems out of scope.

To begin with, it require an electronic signature, at least 128-bits such as MD-5. But even MD-5 is now considered too weak for this use, and shall be superseded by stronger 160-bits SHA-1 and such.

One obvious issue is speed. What's the use of a super-fast compression / decompression algorithm if the checksum function is several times slower ?

But the most important issue is that the signature is not enough. The signature's mission is to make it almost impossible to find a modification to the original source file which produces the same electronic signature. But since the electronic signature is provided within the framing format, a malicious attacker would just need to change both the content and the signature.

Therefore, an effective protection shall also require encryption, or a separate signature storage and transmission, which is way beyond the objective of this framing format.

So we'll just consider random data corruption from now on.

The next question is : what has to be checksum-ed ?

Due to the popularity of the Zip format, an obvious answer could be "the original data".

But let's look into it, it's not that obvious.

Take the snappy framing format for example. It checksums the original data of each chunk individually, with each chunk size being <= 32KB. The main advantage is that an incorrect chunk is detected immediately, there is no need to wait for the end of the stream for this result. This is especially important when the stream is very large (for example 1GB), and the consumer process wants to use the decoded data without waiting for the last chunk.

The main drawback, however, is silent truncation. Imagine for example that the last few chunks are missing. There will be no way to detect that.

Another possible answer is to checksum the compressed data instead of the original one.

This is valid. A compressed stream can only be decoded into a single result. Hence, protecting the compressed data from errors is the same as protecting the original data.

And it brings several advantages with it.

First, by checking the checksum against the compressed stream, it warns against data corruption even before attempting to decode.

Second, since compressed data is generally smaller than original one, the checksum function will be faster to execute. Both properties have positive impact on CPU consumption.

Note however that for the first property to be true, each chunk must be checksumed, not just the entire stream, because the decoding starts immediately on reading the first chunk from i/o, both to save time and memory.

OK, so considering these advantages, i'm almost sold to the method of checksuming the compressed chunks. The most important drawback i would still worry about is the silent truncation issue of snappy-frame.

It seems however this can be easily solved. One just needs to ensure that the "continuation" information is provided. And there are several possibilities to do it. For example, provide the size of the original data into the framing format, and calculate the number of (fixed size) chunks. Or embed the continuation information into a field associated with each chunk, and make it part of the checksumed data. There are surely other possibilities. The main idea is that the "end of stream" information must be provided, not just detected on hitting the EOF marker.

One last property that could be missing is using the checksum as a kind of "weak signature". For example, in the zip format, since the checksum is calculated from the original data, it is possible to "check" that the file present into the zip file is likely the expected one.

But i'm not sure if such usage has any real value, not even convenience. Has stated earlier, such "signature" is much too weak to be trusted, so it can merely be used as an "hint", not much else.

As usual comments are welcomed.

There is another point to discuss, which is the checksum algorithm itself.

We'll keep that for another blog note.

Sunday, April 8, 2012

A file container format for LZ4

With increased usage of LZ4 come new use cases, and with them new requirements.

The recent ports of LZ4 to Python and Perl are two excellent examples, demultiplying the possible usages by making the algorithm available to a broader range of applications, including easy-to-deploy scripts.

In this context, a simple question is asked : how can one be sure that a file compressed with, for example, the Python port of LZ4, will be decodable by the C# port of LZ4 ?

Which is indeed a very good question. And the sorry answer is that : there is no such guarantee. At least, not yet.

LZ4 has been developed as an in-memory compression algorithm : it takes a memory buffer as an input, and provides the compressed result into an output memory buffer. Whatever is done before or after that operation is beyond its area of responsibility. The fact that these buffers may be read, or written, to disk is just one of many possibilities.

This is a correct positioning. There are too many possible usages for LZ4, and a lot of them will never bother with on-disk files or network streaming.

Nevertheless, it happens that one natural usage of compression algorithm is file compression, and it applies to LZ4 too. In this case, the compressed result is written to a media, and may be decoded later, possibly by a completely different system. To ensure this operation will be always possible, even when using any other version of LZ4 written in any language, it is necessary to define a file container format.

To be more complete, a container format has been defined into lz4demo, but it was never meant to become a reference. It is too limited for this ambition. Therefore, a new container format specification is necessary.

The ideas in this post will be largely borrowed from this older one, where a container format was discussed and defined for Zhuff. Most of the points are still applicable for LZ4.

We'll start by defining the objectives. What are the responsibilities of a file container format ? Well, mostly, it shall ensure that the original file will be restored entirely and without errors. This can be translated into the following technical missions :

This is already quite a few missions. But most of them will be possible, as we'll see later.

Another important thing to define is what's not within this format missions :

While the file container format takes care of regenerating original content, the archive will also take care of directory structure. It will be able to save file names, and re-organize them in a better way to help compression. On decoding, it will regenerate the entire tree structure, placing each file at its correct location, with sometimes also original attributes.

An archive format therefore occupy a higher layer in the structure. And it typically depends itself on such container formats.

Last but not least, we'll make the definition of "file" a bit broader, Unix-like, in order to also encompass "pipe'd data". The "file container format" then becomes a "stream container format", with files being one of the possible streams.

All these elements can be provided in a relatively compact and efficient header.

But first, let's discuss the most important features.

[Note] Considering the comments, it seems the word "container" does not properly define the objective of this specification. Another word shall be found. "Framing format" is the current candidate.

The recent ports of LZ4 to Python and Perl are two excellent examples, demultiplying the possible usages by making the algorithm available to a broader range of applications, including easy-to-deploy scripts.

In this context, a simple question is asked : how can one be sure that a file compressed with, for example, the Python port of LZ4, will be decodable by the C# port of LZ4 ?

Which is indeed a very good question. And the sorry answer is that : there is no such guarantee. At least, not yet.

LZ4 has been developed as an in-memory compression algorithm : it takes a memory buffer as an input, and provides the compressed result into an output memory buffer. Whatever is done before or after that operation is beyond its area of responsibility. The fact that these buffers may be read, or written, to disk is just one of many possibilities.

This is a correct positioning. There are too many possible usages for LZ4, and a lot of them will never bother with on-disk files or network streaming.

Nevertheless, it happens that one natural usage of compression algorithm is file compression, and it applies to LZ4 too. In this case, the compressed result is written to a media, and may be decoded later, possibly by a completely different system. To ensure this operation will be always possible, even when using any other version of LZ4 written in any language, it is necessary to define a file container format.

To be more complete, a container format has been defined into lz4demo, but it was never meant to become a reference. It is too limited for this ambition. Therefore, a new container format specification is necessary.

The ideas in this post will be largely borrowed from this older one, where a container format was discussed and defined for Zhuff. Most of the points are still applicable for LZ4.

We'll start by defining the objectives. What are the responsibilities of a file container format ? Well, mostly, it shall ensure that the original file will be restored entirely and without errors. This can be translated into the following technical missions :

- Detect valid containers from invalid input (compulsory)

- Detect / control errors (recommended)

- Correct errors (optional)

- Help the decoder to organize its memory buffers

- buffer sizes, and number of buffers (compulsory)

- provide user control over buffer sizes (optional)

- Organize data into independent chunks for multi-threaded operations (optional)

- Allow to quick-jump to a specific data segment within the container (optional)

- Be compatible with non-seekable streams, typically pipe mode (compulsory)

- Enforce alignment rules (optional)

- Allow simplified concatenation of compressed streams (optional)

This is already quite a few missions. But most of them will be possible, as we'll see later.

Another important thing to define is what's not within this format missions :

- Save the file name (debatable)

- Save file attributes and path

- Group several files (or directory) into a single compressed stream

- Provide optional user space, typically for comments (debatable)

- Split the compressed stream into several output files

While the file container format takes care of regenerating original content, the archive will also take care of directory structure. It will be able to save file names, and re-organize them in a better way to help compression. On decoding, it will regenerate the entire tree structure, placing each file at its correct location, with sometimes also original attributes.

An archive format therefore occupy a higher layer in the structure. And it typically depends itself on such container formats.

Last but not least, we'll make the definition of "file" a bit broader, Unix-like, in order to also encompass "pipe'd data". The "file container format" then becomes a "stream container format", with files being one of the possible streams.

All these elements can be provided in a relatively compact and efficient header.

But first, let's discuss the most important features.

[Note] Considering the comments, it seems the word "container" does not properly define the objective of this specification. Another word shall be found. "Framing format" is the current candidate.

Sunday, January 8, 2012

Probability Update

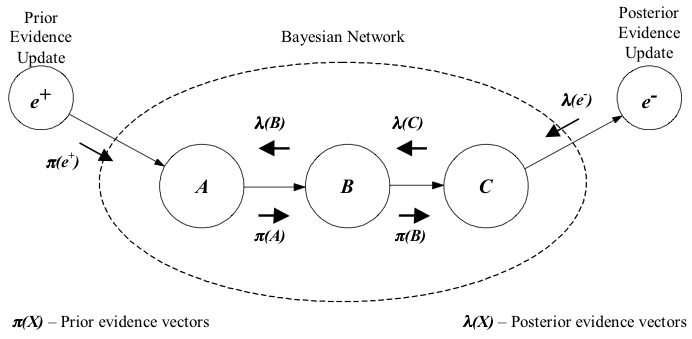

Following the previous post on Binary Arithmetic Coder, we left with a simple-to-ask but difficult-to-answer question : how to update the probability that the next value will be a 0 or a 1 ?

Indeed. Presuming that we can update P0 (probability that next value will be a zero) suppose that we accept a simple idea : the past is a strong indicator for the future.

This may not always be true, but for compression it appears to work relatively well. At this stage, we can outline the strong relationship between compression and prediction : compression ratio will only be as good as the algorithm can predict the future (the next bit in this case).

Trying to predict the future is not limited to compression, and massive amount of investment have been and are currently spent around this applied concept. In this post however, we'll keep our mind focused on compression, considering other fields only if they can help this objective.

A first simple way to evaluate P0 is to count the number of 0 and 1 previously seen.

Then, simply set : P0 = N0 / (N0+N1).

It works, and is a simple way to achieve convergence towards optimal stationary statistics.

But, in fact, no source is really static. They constantly evolve (otherwise, compression would be trivial). The probability should therefore adapt to the source, and consequently, more weight should be given to the most recent bits.

A very simple way to achieve this property is by giving to new bits a fixed share of the updated probability. For example 20%. This is an easy method to gradually and smoothly "reduce" the weight of older records.

And we get :

newP0 = oldP0 x (1-share) + NewBitIsZero x share

This works too, although we start to wonder what should be such "share" ?

20% ? 10% ?

It is very tempting to believe that the optimal adaptation share can be found as a unique value. And indeed, through brute force testings (such as Genetic Algorithms) an optimal value can be found.

[Edit : an excellent example of "optimal adaptation share" is provided by Shelwien here : http://nishi.dreamhosters.com/u/book1bwt_wr.png ]

However, the optimal value will be true only for a given file, number of contexts and precision level. Change any parameter, and the optimal value will change too..

Could such optimal adaptation share be guessed beforehand, through calculation, rather than sheer experimentation ? Well, unfortunately no. It depends too much on the source, although some basic rules of thumb do apply : if the file is small, the share will be higher. If it is large, the share will be lower.

This points towards something retrospectively obvious : at the beginning of the file, when statistics are still scarce, the adaptation must be faster. Then, the more we accumulate statistics, the more accurate they should become, so the adaptation share must become lower.

It does not answer to the question "how much", but hints towards a moving value, becoming lower as we progress into the source file.

In order to get closer to the "how much" answer, I've collected some statistics, that we'll see and comment in a later post...

Thursday, January 5, 2012

Binary Arithmetic Coder

There is a way to code an element using less than one bit of space. Although the idea might sound weird, it is in fact a proven method since a few decades now, under the name of Arithmetic Coding.

As a simple explanation, let's say that arithmetic coding is no longer about using the optimal number of bits to code an element, as does Huffman, since this method can't do better than one bit per element. We will now define "ranges", within which each element will take a share (such as 2%, 75%, etc.). This method is extremely precise, since each element will cost exactly its entropy, such as 2.53 bits (precisely, not an approximation to 2 or 3), or 0.17 bits.

For more details on how it works, i invite you to read the Wikipedia entry, which is fairly good.

The method, however, has some drawbacks. To begin with, defining the precise share of each element incurs a header cost. This situation is pretty visible with Range0, which is a block-based range coder. In order to compensate the (relatively) large size of the header, i had to accept block sizes of 128KB, which is much more than the equivalent Huff0 (16KB), resulting in a less precise entropy adaptation.

A way to remove the header cost is to not transmit any header. Then, entropy adaptation is done dynamically, by recalculating shares after each update.

Unfortunately, this method also has its costs. This time, speed is the price to pay. A lot of speed actually. Adjusting a single element requires renormalizing all others, which can be too much of a burden for a 256-element alphabet, such as a Byte.

This is where the Binary Arithmetic Coder can help.

In contrast with previous coders, this one is limited to a 2-elements alphabet, 1 or 0. It's basically a yes/no switch. With this restriction in mind, the Binary Arithmetic Coder comes with a crucial advantage : a trivial update mechanism.

A single value is enough to define the switch. It represents the share of 0, while 1 will take the rest. If 0 is very likely, the share will be high. If we believe that, on next update, the probability of 0 will be even higher, then it's enough to increase the value. Otherwise, decrease the value. That's it.