The bet was ultimately successful, and borrowed some of its success from MurmurHash, most notably its test tool SMHasher, the best of its kind to measure the quality of a hash algorithm. xxHash's speed advantage stems from its explicit usage of ILP (Instruction Level Parallelism) to keep the multiple ALUs of modern CPU cores busy.

Fast forward to 2014, the computing world has evolved a bit. Laptops, desktops and servers have massively transitioned to 64-bits, while 32-bits is still widely used, mostly within smartphones and tablets. 64-bits computing is now part of the daily experience, and it has become more natural to create algorithms primarily targeting 64-bits systems.

An earlier demo of XXH64 quickly proved that moving to 64-bits achieves much better performance, just by virtue of wider memory accesses. For some time, however, I wondered whether that was a "good enough" objective, or whether XXH64 should also offer some additional security properties. It took the persuasion of Mathias Westerdahl to push me to create XXH64 as a simpler derivative of XXH32, which was, I now believe, the right choice.

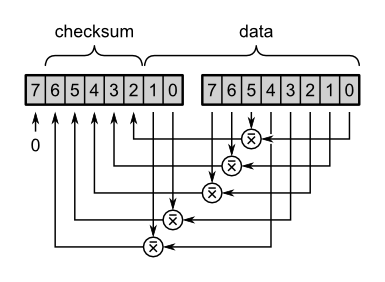

XXH64 is therefore a fairly straightforward application of the XXH methodology to 64-bits: an inner loop with 4 interleaved streams, a tail sequence to handle input sizes which are not multiples of 32, and a final avalanche to ensure all bits are properly randomized. The bulk of the work was done by Mathias, while I mostly provided some localized elements, such as prime constants, shift sequences, and some optimizations for short inputs.
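
The three stages described above can be sketched in a few lines. This is an illustrative transcription, not the shipped code: the real library is written in C, Python is used here only for readability, and the prime constants and rotation amounts follow a reading of the public xxHash reference source, so check them against it before relying on them. The name `xxh64_sketch` is made up for this example.

```python
import struct

MASK64 = (1 << 64) - 1
# Prime constants, as read from the public xxHash reference source.
P1 = 0x9E3779B185EBCA87
P2 = 0xC2B2AE3D27D4EB4F
P3 = 0x165667B19E3779F9
P4 = 0x85EBCA77C2B2AE63
P5 = 0x27D4EB2F165667C5

def rotl64(x, r):
    """Rotate a 64-bit value left by r bits."""
    return ((x << r) | (x >> (64 - r))) & MASK64

def _round(acc, lane):
    """One accumulator step: mix an 8-byte lane into one stream."""
    acc = (acc + lane * P2) & MASK64
    return (rotl64(acc, 31) * P1) & MASK64

def _merge(h, acc):
    """Fold one stream accumulator into the running hash."""
    h ^= _round(0, acc)
    return (h * P1 + P4) & MASK64

def xxh64_sketch(data: bytes, seed: int = 0) -> int:
    n, pos = len(data), 0
    if n >= 32:
        # Inner loop: 4 independent streams, each consuming 8 bytes per pass.
        v = [(seed + P1 + P2) & MASK64, (seed + P2) & MASK64,
             seed & MASK64, (seed - P1) & MASK64]
        while pos + 32 <= n:
            lanes = struct.unpack_from("<4Q", data, pos)  # little-endian reads
            v = [_round(acc, lane) for acc, lane in zip(v, lanes)]
            pos += 32
        h = (rotl64(v[0], 1) + rotl64(v[1], 7)
             + rotl64(v[2], 12) + rotl64(v[3], 18)) & MASK64
        for acc in v:
            h = _merge(h, acc)
    else:
        h = (seed + P5) & MASK64
    h = (h + n) & MASK64

    # Tail sequence: whatever is left after the 32-byte stripes.
    while pos + 8 <= n:
        (lane,) = struct.unpack_from("<Q", data, pos)
        h ^= _round(0, lane)
        h = (rotl64(h, 27) * P1 + P4) & MASK64
        pos += 8
    if pos + 4 <= n:
        (word,) = struct.unpack_from("<I", data, pos)
        h ^= (word * P1) & MASK64
        h = (rotl64(h, 23) * P2 + P3) & MASK64
        pos += 4
    while pos < n:
        h ^= (data[pos] * P5) & MASK64
        h = (rotl64(h, 11) * P1) & MASK64
        pos += 1

    # Final avalanche: spread every input bit over the whole result.
    h ^= h >> 33
    h = (h * P2) & MASK64
    h ^= h >> 29
    h = (h * P3) & MASK64
    h ^= h >> 32
    return h
```

The four accumulators in the inner loop never depend on each other, which is what lets a CPU work on them in parallel. As a sanity check, `xxh64_sketch(b"")` with seed 0 gives `0xEF46DB3751D8E999`, the widely published XXH64 value for empty input.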

The quality of XXH64 is very good, but that conclusion was difficult to reach. A key problem with 64-bits algorithms is that they require generating and tracking too many results to properly measure collisions (you need roughly 5 billion hashes for a 50% chance of getting 1 collision). So, basically, all tests must be perfect, ending with 0 collisions. Which is the case.
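
The order of magnitude can be checked with the usual birthday approximation, p ≈ 1 - exp(-n(n-1) / 2^(bits+1)), which assumes an ideal hash with uniformly distributed outputs. The function names below are made up for this sketch.

```python
import math

def collision_probability(n, bits=64):
    """Approximate chance of at least one collision among n ideal hashes."""
    x = n * (n - 1) / (2.0 * 2.0 ** bits)
    return -math.expm1(-x)  # same as 1 - exp(-x), more accurate for small x

def hashes_for_probability(p, bits=64):
    """Approximate number of ideal hashes needed to reach collision chance p."""
    return math.sqrt(2.0 * 2.0 ** bits * math.log(1.0 / (1.0 - p)))

if __name__ == "__main__":
    print(f"{hashes_for_probability(0.5):.3e} hashes for a 50% chance at 64 bits")
    print(f"{collision_probability(4_000_000_000):.2f} chance with 4 billion hashes")
```

Under this approximation, a 50% chance at 64 bits takes a little over 5 billion hashes, and 4 billion hashes give a chance of about 35%. Either way, it means storing and comparing billions of 64-bit values, which is why a direct collision test is impractical.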

Since it's a bare minimum, and not a good enough objective to measure 64-bits quality, I also stared at the bias metric. The key idea is: any bit within the final hash must have a 50% chance of becoming 0 or 1. The bias metric finds the worst bit, the one which deviates the most from 50%. Results are good, with a typical worst deviation around 0.1%, equivalent to perfect cryptographic hashes such as SHA1.
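
A minimal sketch of such a bias measurement follows. It is not SMHasher's actual test, only the idea described above, and `stand_in_hashes` uses truncated BLAKE2b from the standard library as a placeholder for the hash under test. Both function names are made up.

```python
import hashlib

def worst_bit_bias(hashes, bits=64):
    """Return the largest |P(bit = 1) - 0.5| over all bit positions."""
    ones = [0] * bits
    total = 0
    for h in hashes:
        total += 1
        for b in range(bits):
            ones[b] += (h >> b) & 1
    if total == 0:
        raise ValueError("need at least one hash")
    return max(abs(count / total - 0.5) for count in ones)

def stand_in_hashes(n):
    """Yield n 64-bit values from truncated BLAKE2b (placeholder hash)."""
    for i in range(n):
        digest = hashlib.blake2b(i.to_bytes(8, "little"), digest_size=8).digest()
        yield int.from_bytes(digest, "little")

if __name__ == "__main__":
    print(f"worst bias: {worst_bit_bias(stand_in_hashes(100_000)):.4%}")
```

The measured deviation shrinks with the sample size (roughly as 1/sqrt(n) for an ideal hash), so a figure such as 0.1% only means something when it is compared against a reference hash measured on the same number of samples.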

Since I was still not totally convinced, I also measured each 32-bits part of the 64-bits hash (high and low) as an individual 32-bits hash. The theory is: if the 64-bits hash is perfect, any 32-bits part of it must also be perfect. And the good thing is: with 32-bits, collisions can be properly measured. The results are also excellent, with each 32-bits part getting a perfect score on every metric.
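
The split itself is simple. The helper names below are made up for this sketch, and the expected count is the usual n(n-1)/2^(bits+1) estimate for an ideal hash.

```python
def split_halves(h64):
    """Return the (high, low) 32-bit parts of a 64-bit hash."""
    return (h64 >> 32) & 0xFFFFFFFF, h64 & 0xFFFFFFFF

def count_collisions(values):
    """Count values that repeat an earlier value."""
    seen = set()
    collisions = 0
    for v in values:
        if v in seen:
            collisions += 1
        else:
            seen.add(v)
    return collisions

def half_collisions(hashes):
    """Collision counts of the high and low halves, each taken as a 32-bit hash."""
    highs, lows = [], []
    for h in hashes:
        hi, lo = split_halves(h)
        highs.append(hi)
        lows.append(lo)
    return count_collisions(highs), count_collisions(lows)

def expected_collisions(n, bits=32):
    """Expected number of colliding pairs among n ideal hashes of this width."""
    return n * (n - 1) / 2 / 2 ** bits
```

With 32 bits, a few million hashes are already expected to produce hundreds of collisions, so the measured count can be compared with `expected_collisions(n)` instead of only being checked for zero.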

But it was still not good enough. We could have 2 perfect 32-bits hashes coalesced together, but being a repetition of each other, which of course would not make an excellent 64-bits hash. So I also measured the "Bit Independence Criteria", the ability to predict one bit thanks to another one. On this metric also, XXH64 got a perfect score, meaning no bit can be used as a possible predictor for another bit.
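
One simplified way to look for such predictability is to count, for every pair of output bits, how often the two bits agree. For independent bits this should happen half of the time. This is only a sketch of the idea as described here, not the exact test run by SMHasher, and the function name is made up.

```python
def worst_pair_dependence(hashes, bits=64):
    """Return the largest |P(bit j == bit k) - 0.5| over all bit pairs j < k."""
    hashes = list(hashes)
    total = len(hashes)
    if total == 0:
        raise ValueError("need at least one hash")
    worst = 0.0
    for j in range(bits):
        for k in range(j + 1, bits):
            # XOR of the two bits is 0 exactly when they agree.
            agree = sum(1 for h in hashes if (((h >> j) ^ (h >> k)) & 1) == 0)
            worst = max(worst, abs(agree / total - 0.5))
    return worst
```

A hash made of the same 32-bits value repeated twice would pass a per-bit bias test on every bit, yet bit j and bit j+32 would always agree, so this measure would report the maximum deviation of 0.5.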

So I believe we have gone as far as we could to measure the quality of this hash, and it looks good for production usage. The algorithm is delivered with a benchmark program, integrating a coherency checker, to ensure results remain the same across any possible architecture. It's automatically tested using the Travis continuous test environment, including Valgrind memory access verification.

Note that 64-bits hashes are really meant for 64-bits programs. They get roughly double the speed thanks to the increased number of bits loaded per cycle. But if you try to run such an algorithm in a 32-bits program, the speed will plummet, because emulating 64-bits arithmetic on 32-bits hardware is quite costly.
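
To give an idea of where that cost comes from, here is a sketch of how a single 64-bits multiplication (keeping only the low 64 bits, as the hash does) breaks down into 32-bits pieces. It shows the arithmetic only. What a given compiler emits for a 32-bits target will differ in detail, and the function name is made up.

```python
MASK32 = 0xFFFFFFFF
MASK64 = (1 << 64) - 1

def mul64_from_32bit_parts(a, b):
    """Low 64 bits of a * b, built only from 32-bit halves."""
    a_lo, a_hi = a & MASK32, (a >> 32) & MASK32
    b_lo, b_hi = b & MASK32, (b >> 32) & MASK32
    low = a_lo * b_lo  # one 32x32 -> 64-bit multiplication
    # Two more multiplications; only their low 32 bits reach the result.
    cross = (a_hi * b_lo + a_lo * b_hi) & MASK32
    return (low + (cross << 32)) & MASK64
```

One multiplication becomes three, plus additions and register shuffling. Rotations and additions on 64-bits values need similar two-register handling on a 32-bits target.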

SMHasher speed test, compiled using GCC 4.8.2 on Linux Mint 64-bits. The reference system uses a Core i5-3340M @2.7GHz.

| Version | Speed on 64-bits | Speed on 32-bits |
|---|---|---|
| XXH64 | 13.8 GB/s | 1.9 GB/s |
| XXH32 | 6.8 GB/s | 6.0 GB/s |