Following the entry regarding Error detection, it is still necessary to find a correct algorithm to do the job.

In other circumstances, the solution would have been to simply rely on CRC32. After all, this algorithm is already in use in many applications, including modem transmission (ITU-T V.42), Zip, MPEG-2, etc. So it looks solid.

And solid it is, but fast it is not.

As stated by Shelwien, a fast hard-wired version of CRC32 exists within the most recent Intel CPUs. However, not only is it a limited choice in terms of target CPU, it is also a different variant (CRC-32C, built on a different polynomial, hence incompatible) from the standardized one. Therefore, software mode cannot be avoided.

And what's the use of a very fast compression algorithm if the checksum takes much longer to calculate?

To be more specific, the LZ4 decoder runs at roughly 1 GB/s on my test machine. So, to be almost negligible, a checksum algorithm would need to be ~10x faster, which means 10 GB/s. This is obviously not possible, since RAM itself cannot keep up with such a rate: a memcpy() operation is limited to 2.5 GB/s on the very same machine.

So OK, we can't reach the ideal situation, but what would be the best possible one, i.e. the fastest one?

This is not an easy question, since a fast but weak checksum with very poor properties is the usual outcome. For example, sum all your bytes one by one, and there you have your checksum! Except that its distribution is absolutely horrible, resulting in too many potentially undetected errors.
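To make the weakness concrete, here is a minimal sketch of such a byte-sum checksum. The function name `sum_checksum` is only illustrative; it is not taken from any library.

```c
#include <stddef.h>
#include <stdint.h>

/* Naive additive checksum: add every input byte into a 32-bit accumulator.
   (Illustrative name, not part of any existing package.) */
uint32_t sum_checksum(const void *data, size_t len)
{
    const unsigned char *p = (const unsigned char *)data;
    uint32_t sum = 0;
    for (size_t i = 0; i < len; i++)
        sum += p[i];   /* byte order is lost: addition is commutative */
    return sum;
}
```

Because addition is commutative, swapping two bytes goes unnoticed ("ab" and "ba" give the same value), and so do compensating errors such as "ad" versus "bc".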

In order to assess if a checksum algorithm features some good properties, it is necessary to test them, with an appropriate tool.

And we are lucky, such a tool exists.

Austin Appleby created MurmurHash, in a bid to provide the fastest possible hash algorithm. In order to test his own algorithm, he also created SMHasher, which is an invaluable tool to assess the performance of any hash function. As can be guessed, MurmurHash satisfies all criteria perfectly, but that doesn't mean that the tool is flawed; it's more a testament that MurmurHash was developed and improved along the lines of SMHasher. That being said, the methodology is sound, and results look reliable.

I immediately took the opportunity to test the different checksum algorithms provided within the package, starting with CRC32 of course. And the results are not excellent.

CRC32 speed is 430 MB/s on the test system. That's not bad, but it is less than half the speed of the LZ4 decoder, which means the CPU would spend more time checking the data than actually decoding it.
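The cost can be estimated with a small back-of-the-envelope model. The sketch below assumes decoding and checksumming run one after the other over the same data, with no overlap and no cache effects; that is a simplification, and the function name is only illustrative.

```c
/* Effective throughput when two passes run sequentially over the same data.
   Time per MB is the sum of the time per MB of each pass.
   Assumption: no overlap between the passes, no cache benefit. */
double combined_mb_per_s(double decode_mb_per_s, double checksum_mb_per_s)
{
    return 1.0 / (1.0 / decode_mb_per_s + 1.0 / checksum_mb_per_s);
}
```

Under this model, a 1000 MB/s decoder paired with a 430 MB/s checksum drops to about 300 MB/s overall, whereas a 10 GB/s checksum would leave it at about 909 MB/s.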

Furthermore, the distribution properties of CRC32 are not "best in class". Quite far from it in fact. The bit distribution is typically "biased", which means that the probability for a bit position to be 0 or 1 is not a near-perfect ~50%. Worse, bit independence is nonexistent. It fails some tests, such as the "2-bytes keyset" and the "HighBits keyset", which has devastating consequences on bit distribution.

This doesn't make CRC32 unusable. It just shows that this older algorithm is not perfect.

More importantly, as expected, it is not fast enough for our needs.

Let's move on to another candidate, FNV, which is also part of the package.

FNV (Fowler-Noll-Vo) has a reputation for being an extremely fast hash function. Many variants have appeared around the web, with the same core principles, marrying XOR with multiplications in an inner loop.
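For reference, the XOR-and-multiply inner loop looks like this in the common 32-bit FNV-1a variant. This is a sketch of that well-known variant, not necessarily the exact code bundled with SMHasher.

```c
#include <stddef.h>
#include <stdint.h>

/* 32-bit FNV-1a: XOR each byte into the state, then multiply by the FNV prime. */
uint32_t fnv1a_32(const void *data, size_t len)
{
    const unsigned char *p = (const unsigned char *)data;
    uint32_t h = 2166136261u;        /* FNV offset basis */
    for (size_t i = 0; i < len; i++) {
        h ^= p[i];                   /* mix in one byte */
        h *= 16777619u;              /* FNV prime, wraps modulo 2^32 */
    }
    return h;
}
```

Note that the loop consumes a single byte per iteration, and each multiplication depends on the previous one, which goes some way towards explaining why the speed is limited.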

The speed is indeed better, but at 550 MB/s, it's not that great.

Furthermore, the properties of FNV are no better than those of CRC32, featuring the same weaknesses, plus failing badly at the "Cyclic test", having poor results on the "sparse keyset", and very poor ones on both low and high bits keysets, etc. Well, the results are so bad that I could not recommend this function to anyone.

So, let's go straight to the king of the hill. The most recent version of MurmurHash (v3.a) is said to be the fastest hash function available. We are only interested in the 32-bit version, so let's put that one on the grill.

And yes, fast it is. The speed is 2.7 GB/s. This is just huge. It's close to my RAM speed limit. Interestingly, the speed depends on the input being aligned on 4-byte boundaries (32 bits). If not, the speed dips to 2.0 GB/s, which is still good.

More importantly, with this speed, we get all SMHasher properties verified, without a single trace of distribution weakness. That's great. We have a candidate!

************************************************************************************

The story could have ended here. But as usual, I couldn't resist. I wanted to put some of my own functions to the test, thanks to SMHasher.

An old "fast hash" of mine turned out to have the same speed as MurmurHash, but much worse properties.

That could have been the end. MurmurHash is so fast that maybe it is merely limited by RAM speed after all?

So I made another, simpler, attempt purely targeting speed. It resulted in an 8 GB/s hash function. Yep. This is much more than my RAM speed, so how could it be?

Well, in fact, it is much faster than memcpy(), but there's nothing magic: memcpy() not only reads its input from memory, but also writes to memory. This is something the hash function does not need to do. The input is simply "sunk" into registers. So it can be that fast, and there is definitely some room left for more speed.

I therefore spent a little more time studying what makes MurmurHash such a great hash function.

I believe there are 2 key parts:

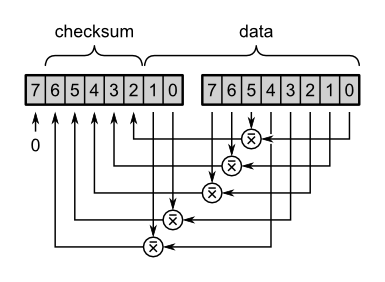

The first one "blends" the fed input bytes into registers, thanks to several simple operations, the most important of them being _rotl().

_rotl() (rotate left) ensures that the bits that were previously high now become low. So it effectively nullifies the problem of high bits having a small contribution to the overall hash distribution.
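As a rough illustration, here is a portable rotate-left, followed by one blending round written in the style of the 32-bit MurmurHash3 reference code. The constants are the ones I recall from that public-domain source; the function names `rotl32` and `blend_round` are mine, and the sketch is not meant as a complete hash.

```c
#include <stdint.h>

/* Portable 32-bit rotate left, standing in for the _rotl() intrinsic.
   Bits shifted out at the top re-enter at the bottom. */
uint32_t rotl32(uint32_t x, unsigned r)
{
    r &= 31;
    return (x << r) | (x >> ((32 - r) & 31));
}

/* One blending round: mix a 32-bit input word k into the running state h.
   Constants assumed from the MurmurHash3 x86_32 reference code. */
uint32_t blend_round(uint32_t h, uint32_t k)
{
    k *= 0xcc9e2d51u;
    k = rotl32(k, 15);      /* bring high bits of the product down low */
    k *= 0x1b873593u;
    h ^= k;
    h = rotl32(h, 13);
    return h * 5u + 0xe6546b64u;
}
```

A multiplication only propagates information upwards, from low bits to high bits. The rotation brings the well-mixed high bits back to the low positions, so that the next multiplication can spread them again.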

The second one digests the final blended result thanks to a series of small steps known as "avalanche". It simply destroys any bit bias or correlation that the blend may have left.
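The avalanche stage can be sketched as follows. This mirrors the 32-bit finalizer of MurmurHash3 as I recall it from the public-domain reference code; the name `fmix32` comes from that source, and the constants should be treated as assumptions to check against it.

```c
#include <stdint.h>

/* Final avalanche: alternate xor-shifts (high bits influence low bits)
   with multiplications (low bits influence high bits). */
uint32_t fmix32(uint32_t h)
{
    h ^= h >> 16;
    h *= 0x85ebca6bu;
    h ^= h >> 13;
    h *= 0xc2b2ae35u;
    h ^= h >> 16;
    return h;
}
```

Each step is reversible, so no two distinct states collapse into the same output; the stage only scrambles, it does not lose information.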

With both being combined, we get an almost perfect hash distribution.

So I started from there, re-using the above "fast simple hash" and improving it with the presented ideas. I ended up with a 7.5 GB/s hash function, which is just hellish.

It passes all the tests except for a single one, "Keyset 'Sparse' - 2048-bit keys with up to 2 bits set". That's because the blending phase creates some "twin bits" far apart from each other, at varying distances depending on bit position. This is obviously bad for a crypto-hash, but a 32-bit checksum is not meant for this task anyway.

We simply want to detect errors, most probably transmission and storage failures, or manual glitches. For such cases, since twin bits are very far apart, it would take extreme bad luck for random errors to land exactly on a twin-bit pattern. In fact, the probability seems lower than getting a collision from two completely different inputs on the 32-bit checksum, which is the limit of this exercise.

Anyway, for the sake of it, I also created a stronger version of the algorithm, which adds an operation in the inner loop to make the twin-bit pattern vanish. This version reaches 4.2 GB/s, which is still good, but noticeably slower than the fast version. Well, that's the price to pay for stronger properties.

The final result of these investigations is called xxHash, and is now an open-source project, hosted on Google Code.

*****************************************************

(Update): A new xxHash version has been published. It features the same diversity as xxHash_strong, while being faster, at 5.4 GB/s on the same platform (Core 2 Duo, 3 GHz). It also allows providing input in several consecutive calls, as opposed to a single memory block.
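To illustrate what "several consecutive calls" means, here is a minimal sketch of an init / update / digest interface. It is not xxHash's API: the names `stream_state`, `stream_init`, `stream_update` and `stream_digest` are hypothetical, and the inner loop is a simple FNV-1a stand-in chosen only to keep the example short.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical streaming interface; the state survives between calls. */
typedef struct { uint32_t h; } stream_state;

void stream_init(stream_state *s)
{
    s->h = 2166136261u;              /* FNV-1a offset basis (stand-in hash) */
}

/* Feed one more chunk of input; may be called any number of times. */
void stream_update(stream_state *s, const void *data, size_t len)
{
    const unsigned char *p = (const unsigned char *)data;
    for (size_t i = 0; i < len; i++) {
        s->h ^= p[i];
        s->h *= 16777619u;
    }
}

/* Read the checksum of everything fed so far. */
uint32_t stream_digest(const stream_state *s)
{
    return s->h;
}
```

Feeding "foo" then "bar" yields the same value as feeding "foobar" in one call. A hash that consumes its input in larger words, rather than byte by byte, would additionally need to buffer any incomplete block between calls.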