The bet was ultimately successful, and borrowed some its success from Murmurhash, most notably its test tool smHasher, the best of its kind to measure the quality of a hash algorithm. xxHash speed advantage stems from its explicit usage of ILP (Instruction Level Parallelism) to keep the multiple ALU of modern CPU cores busy.

Fast forward to 2014, the computing world has evolved a bit. Laptops, desktops and servers have massively transitioned to 64-bits, while 32-bits is still widely used but mostly within smartphones and tablets. 64-bits computing is now part of the daily experience, and it becomes more natural to create algorithms targeting primarily 64-bits systems.

An earlier demo of XXH64 quickly proved that moving to 64-bits achieves much better performance, just by virtue of wider memory accesses. For some time however, I wondered if it was a "good enough" objective, if XXH64 should also offer some additional security properties. It took the persuasion of Mathias Westerdhal to push me to create XXH64 as a simpler derivative of XXH32, which was, I believe now, the right choice.

XXH64 is therefore a fairly straighfoward application of XXH methodology to 64-bits : an inner loop with 4 interleaved streams, a tail sequence, to handle input sizes which are not multiple of 32, and a final avalanche, to ensure all bits are properly randomized. The bulk of the work was done by Mathias, while I mostly provided some localized elements, such as prime constants, shift sequences, and some optimization for short inputs.

The quality of XXH64 is very good, but that conclusion was difficult to assess. A key problem with 64-bits algorithms is that it requires to generate and track too many results to properly measure collisions (you need 4 billions hashes for a 50% chance of getting 1 collision). So, basically, all tests must be perfect, ending with 0 collision. Which is the case.

Since it's a bare minimum, and not a good enough objective to measure 64-bits quality, I also starred at bias metric. The key idea is : any bit within the final hash must have a 50% chance of becoming 0 or 1. The bias metric find the worst bit which deviates from 50%. Results are good, with typical worst deviation around 0.1%, equivalent to perfect cryptographic hashes such as SHA1.

Since I was still not totally convinced, I also measured each 32-bits part of the 64-bits hash (high and low) as individual 32-bits hashes. The theory is : if the 64-bits hash is perfect, any 32-bits part of it must also be perfect. And the good thing is : with 32-bits, collision can be properly measured. The results are also excellent, each 32-bits part scoring perfect scores in all possible metric.

But it was still not good enough. We could have 2 perfect 32-bits hashes coalesced together, but being a repetition of each other, which of course would not make an excellent 64-bits hash. So I also measured "Bit Independence Criteria", the ability to predict one bit thanks to another one. On this metric also, XXH64 got perfect score, meaning no bit can be used as a possible predictor for another bit.

So I believe we have been as far as we could to measure the quality of this hash, and it looks good for production usage. The algorithm is delivered with a benchmark program, integrating a coherency checker, to ensure results remain the same across any possible architecture. It's automatically tested using travis continuous test environment, including valgrind memory access verification.

Note that 64-bits hashes are really meant for 64-bits programs. They get roughly double speed thanks to increased number of bits loaded per cycle. But if you try to fit such an algorithm on a 32-bits program, the speed will drastically plummet, because emulating 64-bits arithmetic on 32-bits hardware is quite costly.

SMHasher speed test, compiled using GCC 4.8.2 on Linux Mint 64-bits. The reference system uses a Core i5-3340M @2.7GHz

| Version | Speed on 64-bits | Speed on 32-bits |

|---|---|---|

| XXH64 | 13.8 GB/s | 1.9 GB/s |

| XXH32 | 6.8 GB/s | 6.0 GB/s |

Awesome! This is exactly what I've been looking for.

ReplyDeleteHow it compares (speed) with CityHash128?

ReplyDeleteThey are roughly similar on this test platform (13.0 GB/s in 64 bits mode, 1.7 GB/s in 32-bits mode).

DeleteNote that Cityhash can use Intel's hardware CRC32C instruction for optimal speed (see http://en.wikipedia.org/wiki/CityHash). Depending on the presence or absence of this instruction on your test system, your mileage may vary.

Hi Yann,

ReplyDeleteRemember that you have practically built free checksum inside FSE/ANS: you start with some fixed initial state and store the final state. If something was wrong, the decoded initial state will turn out a random value instead - there is approximately 1/L probability of false positive, what can be decreased by using simultaneously a few states (interleaving).

A good entropy coder can be simultaneously used also for something much stronger: not only to decide whether the data is damaged, but also allow to repair such eventual damages (error correction).

For this purpose, add "forbidden symbol" to alphabet and rescale the rest. You don't use this symbol while encoding, but it can be accidentally used after an error while decoding - in this case decoder should go back and try a correction - developing a tree of corrections ( http://demonstrations.wolfram.com/CorrectionTrees/ ).

Best,

Jarek

Hi Jarek

DeleteThat's a good point (at least, when initial value is not used for something else, such as cheap last-symbol storage).

Of course, the detection probability is limited by the size of the table (typically 4K == 12 bits). That's less than a 32-bits checksum (4B), but if the goal is only to catch accidental errors, then it could be considered good enough.

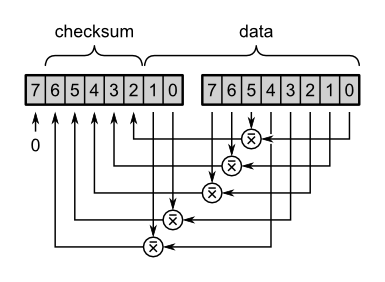

Also : when using multiple streams interleaved, it creates multiple state value which can be used to check bitstream integrity, adding their bit in the process, hence improving detection power.

Thanks for the demo. Very interesting. "forbidden symbol" imply a bit of redundancy, which play against compression efficiency. That's normal, all error correction algorithms must introduce such redundancy.

Typical error correction algorithm is usually added on top of the data to protect, so it's quite new to integrate it directly into the entropy coder. One would need a comparison to see if this method is as efficient (introduce less redundancy for the same correction power) as dedicated algorithms such as, for example, turbo-codes.

Hi Yann,

ReplyDeleteIndeed redundancy has a cost - tiny for checksum, and huge for error correction. 2^-12 false positive probability may not be sufficient for many applications (~2^-13 when the initial state is chosen as the last one).

rANS can directly operate on 64 bits states, while for tANS/FSE we could "couple" a few states, so that an error disturbs all of them. Unfortunately simple interleaving (e.g. even symbols are encoded using the first state, odd using second) is not sufficient - e.g. a single bit damage affects only a single state (and bit synchronization, but it's just an order of magnitude). A cheap coupling to prevent that is e.g. (sometimes?) encoding XOR of current symbol with the previous one.

Regarding correction trees, it is basically so called sequential decoding used for convolutional codes, but with a more sophisticated coding scheme. I have worked later on it and for large states and bidirectional correction it can easily outperform turbo codes and most of LDPCs - here is implementation and preprint: https://indect-project.eu/correction-trees/

However, for ANS we cannot use bidirectional correction, the code is nonsystematic and tANS has relatively small state - the performance would be slightly worse (~turbo codes level). But it is cheap.

Another nearly free option that can be added to tANS based compressors is encryption - e.g. just slightly perturb symbol spread accordingly to cryptographic key.

Among error correction algorithms, there is a large difference between algorithms which can detect and correct a bit flip, and those which also successfully manage to correct a missing or additional bit.

DeleteThe second category is very important within transmission protocols, since missing/added bits are consequences of small synchronization errors, and I was learned in school that they are solved using convolutive codes. Such codes tend to have sizable redundancy though.

Does your method belong to the first (bit flip only) or second category ?

Indeed, due to fixed block structure, synchronization errors are nearly impossible to deal with for DLPC and turbo codes. However, for sequential decoding such errors are just additional local corrections to consider - types of branches in correction tree. Then, the regularly distributed checksums, with large probabilities cuts off wrong branches.

ReplyDeleteSo if we operate e.g. on 8 bit blocks, there are 256 possible bit flips to consider, with rapidly dropping probability (we will develop the tree a lot until considering scenario with all bits flipped).

To add e.g. deletion errors, we just add them to the set of local scenarios to consider with corresponding probabilities - starting e.g. with taking 7 bits and inserting 1 bit in all possible positions.

The biggest issue here is that different corrections can lead to the same sequence, increasing complexity of decoder.

I think I could write a practical decoder for reasonable parameters, close to (unknown!) capacity ...

I believe that if you can achieve such objective, it will be a great breakthrough, opening perspectives for transmission scenarios.

Delete1/256th is really a very small overhead, you can easily increase it to 1/16th, it's still reasonable for error correction purpose.

One thing I'm still wondering a bit : an error is detected when reaching a forbidden symbol. OK. But what about an error at the end of the bitstream, which, by luck, never reach a forbidden symbol ? I guess we can still use the "end of bitstream state number" to detect it, but does that affect the way the error is corrected ?

The overhead depends on noise level - probability of forbidden symbol is the probability of detection that we are on a wrong correction, but the cost of increasing this probability is rate reduction.

DeleteThis cost can be huge when there is large space of possible damages - if each length N sequence can be damaged in 2^k ways, you can transmit at most 2^{N-k} possibilities ("codewords"), getting rate limit (N-k)/N.

Indeed the final state should be also written (protected) for final confirmation. And having it, we could try to simultaneously develop second tree: in backward direction and finally try to meet both trees (bidirectional correction in linked implementation). However, ANS does not allows for decoding in both directions ...

As correction trees seems the most promising approach e.g. for deletion channel, maybe I will write a decoder after finishing my current project ...

Done: https://github.com/JarekDuda/DeletionChannelPracticalCorrection

DeleteThere are still places for improvements (bidirectional correction), but it has already 2-3 times better rate than some LDPC-based I have found.

It looks great Jarek !

DeleteYour coding style is very clean too.

Could you please define what you mean by "2-3 times better rate than LDPC" ? As far as I know, LDPC can be made arbitrarily close to the Shannon Limit, so it's difficult for me to understand how it can be beaten by such a margin, but maybe you use a different metric.

Another area of attention is also computation time. LDPC is winning design contests since a decade thanks to its lower computation cost (compares with Turbo Codes). So for ANS-based correction to become an effective competitor, it will also have to fare favorably into this area.

I'll probably have a deeper look at your code in the next few days, and probably start by building some testing capabilities around it. It will be useful to properly assess its effectiveness and find some potential remaining bugs or limits.

Best Regards

Hi Yann,

DeleteThanks for the comment about my coding style, but I know that I should use longer variables. I have to admit that my direct motivation for this implementation was practicing C++ as a few weeks ago I didn't get research position at Google because of poor C++ skill ...

Regarding LDPC, it is not well suited for synchronization errors. Look at page 8 of http://www.eecs.harvard.edu/~chaki/doc/code-long.pdf - their 0.2333 rate code breaks for p ~ 0.07-0.08 ... while my implementation still works above it for rate 0.5 - can transmit more than twice more through the same channel (and can still be improved by adding bidirectional correction).

Regarding speed, the "nodes" in the table is practically linear coefficient for decoding time - 1 means just decoding (one table use per byte). So for low noise level (everyday use) such correction is extremely fast and cheap. Sometimes, accidentally the damage is serious - in this case you can usually still correct, but it becomes really costly.

Regarding ANS - the basic concept was indeed for this coding, however I have replaced it with a more appropriate one for correction purpose in the previous paper: which allows for bidirectional correction, which is systematic (not corrected regions maintain the channel's noise) and quickly uses large state (64 bit).

If artificially increasing the number of ANS states (... ot using rANS), it should get similar frame rate as this implementation (forward only) - the advantage would be smooth control of code rate (by probability of forbidden symbol).

Best Regards,

Jarek

For a 32 bit hash on 64 bit programs, couldn't you then call the 64 bit hash and drop the lower 32 bits? Would that not give you higher speed than the 32 bit algorithm? If so, perhaps it should just do that by default.

ReplyDeleteYes Sebastian, you are totally right;

Deleteyou can extract any part of the 64-bits hash to create a n-bits hash of good quality. You could even use this opportunity to create several n-bits hash in a single row (bloom filter use case comes to mind).

> perhaps it should just do that by default

Note that 64-bits hash is fastest only if you are sure your program is 64-bits. If your program works sometimes in 32-bits mode, this doesn't work anymore : 64-bits arithmetic will kill performance.

This is for example the case for LZ4 error detection : the algorithm must generate an identical checksum on a variety of systems, some 32-bits, some 64-bits. All must generate the same checksum, therefore the algorithm must be the same.

It's a matter of use case, which is under complete programmer's control. The library can't guess such situations automatically, and therefore offer both 32-bits & 64-bits variants to let the programmer choose.

Any plans on building a 128bit version?

ReplyDeleteNo, as there is no demand for it.

Deletea 128 bits version could be created using the same core engine as XXH64, but a different final avalanche. The speed would be approximately equivalent.

umac-based hash is much faster on 32-bit cpus, has quality guaranteed by its cryptographic (MAC) roots and can be extended to any size by hashing multiple times with different key. its only drawback is much larger code since it employs AES for key stream generation

ReplyDeletefor each 64-bit of input data it performs the following computation:

sum += (data0+key0)*(data1+key1)

where data/key are 32-bit values and multiplication/sum are 64-bit

even without SIMD, these are only 1-2 cpu ticks consuming 64 bits of input data and producing 32-bit result (higher bits of sum). and with SSE2/AVX2 it's 4 cpu ticks consuming 32/64 input bytes and producing 32-bit result

awesome work and explained elegantly. Do you have any plan to release XXH64 (java version) from https://github.com/jpountz/lz4-java git repo? I dont see XXH64 in latest version (1.2)

ReplyDeleteYou'll probably have a better chances to get an answer by putting your request directly in the lz4-java issue board. This is Adrien's work, he's the only to provide you an answer.

DeleteYou measured collisions taking just the lower 32 bits, and just the upper 32 bits. I suggest also measuring collisions taking the 32 even numbered bits and the 32 odd numbered bits. That would give you a pretty good "matrix".

ReplyDeleteI made a few 32-bits extractions of the 64-bit hash, they all worked well.

DeleteI believe the most important test is in fact the "Bit Independence Criteria", which ensures that no bit pattern can be "guessed" from another bit. On this measure, I got a maximum variation < 0.3% from a strict 50% error rate, which is equivalent to a perfect noise.

Hi,

ReplyDeleteHow to install xxhash on centos and how to test the file and folder.

Thanks,

Chellasundar

In the "dev" branch, there is an installer :

Deletesudo make install

Then,

man xxhsum

for detailed instructions.

If you just want to test, you don't necessarily have to install.

You can just do :

make

in the master branch.

[root@localhost Digest-xxHash-2.03]# make

Deletemake: *** No targets specified and no makefile found. Stop.

We are unable to make a file.

We need to install any RPM package.

No manual entry for xxhsum

Delete